A few years ago, the idea of letting artificial intelligence (AI) tweak a production mainframe system on its own would’ve made even the most daring system programmer break out in a cold sweat. We would have heard, “Let the machine make the change? Not on my watch.”

The mainframe world has always been built on a foundation of precision, predictability, and an almost reverent respect for the power of the platform. You didn’t “try things.” You tested, double-checked, documented, and then—maybe—hit Enter.

But something’s changing.

In the 2025 BMC Mainframe Survey, we asked hundreds of mainframe professionals about their willingness to trust AI to manage tasks across development, operations, and data management. We expected caution, skepticism, maybe polite curiosity. What we got instead surprised us.

The Spectrum of Trust

We asked respondents to picture AI handling some everyday but mission-critical mainframe tasks—things like checkpoint/commit pacing, problem diagnosis, and determining when or what to back up. For each, they had four options:

- Not at all. “No way, not letting AI touch that.”

- Alert to the need for action. “AI can tell me something’s wrong.”

- Recommend an action. “AI can advise me what to do.”

- Complete the action. “AI can fix it itself.”

The results were eye-opening.

For checkpoint/commit pacing frequency, only 9 percent said “not at all.” A quarter were comfortable with alerts, 37 percent said “recommend an action,” and 28 percent said “complete the action.”

For problem diagnosis, 8 percent said “not at all,” 25 percent said “alert,” 37 percent said “recommend,” and 32 percent, nearly a third, said they’d let AI complete the task.

And for determining when or what to back up, 9 percent said “not at all,” 24 percent said “alert,” 39 percent said “recommend,” and 28 percent said “complete.”
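For readers who want to check the arithmetic, here is a minimal Python sketch that tabulates the percentages quoted above. The names (`SURVEY`, `share`, `mean_share`, `advise_or_act`) are illustrative and not part of the survey. The quoted figures do not all total exactly 100, so treat the results as approximate.

```python
# Percentages as quoted in the text above, in spectrum order.
# "A quarter" for checkpoint alerts is entered as 25 (an assumption).
LEVELS = ("not at all", "alert", "recommend", "complete")
SURVEY = {
    "checkpoint/commit pacing": (9, 25, 37, 28),
    "problem diagnosis": (8, 25, 37, 32),
    "backup timing and scope": (9, 24, 39, 28),
}


def share(task, level):
    """Percentage of respondents who chose `level` for `task`."""
    return SURVEY[task][LEVELS.index(level)]


def mean_share(level):
    """Unweighted mean of one level's share across the three tasks."""
    return sum(share(task, level) for task in SURVEY) / len(SURVEY)


def advise_or_act(task):
    """Share choosing either 'recommend' or 'complete' for `task`."""
    return share(task, "recommend") + share(task, "complete")


if __name__ == "__main__":
    for task in SURVEY:
        print(f"{task}: {advise_or_act(task)}% recommend or complete")
    print(f"mean 'complete' share: {mean_share('complete'):.1f}%")
```

Across all three tasks, about two-thirds of respondents would let AI at least recommend an action, and the "complete" share averages a little under 30 percent.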

“Trust doesn’t remove oversight—it redefines it.”

When we saw these numbers, there was a pause in the virtual room—the kind where everyone scrolls back to make sure the data isn’t reversed. Because for decades, automation on the mainframe has been viewed as an assistant, not a replacement. The notion that roughly one in three mainframers is ready to let AI act—not just advise—represents a turning point.

We call this progression the “Spectrum of Trust.” It’s the journey from AI as an advisor, to AI as a partner, to AI as an operator. And it’s happening faster than anyone expected.

What’s Driving This Shift?

To understand why this change is happening, it helps to look beyond the technology itself—to the people behind the screens.

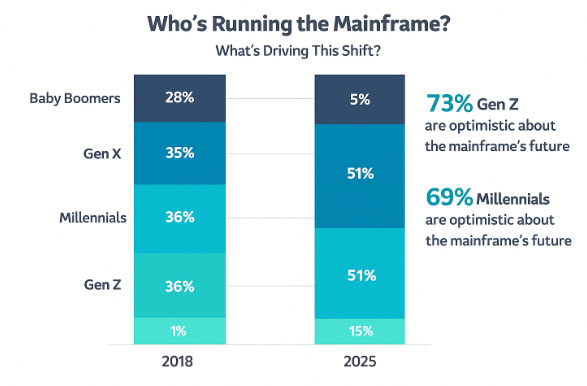

In 2018, the mainframe workforce was dominated by Baby Boomers and early Gen Xers. Our latest survey shows just how dramatically that landscape has evolved. Millennials now make up 51 percent of the mainframe workforce, up from 36 percent seven years ago. Gen Z, the new kids on the data center floor, has jumped from 1 percent to 15 percent. Gen X has declined slightly, from 35 percent in 2018 to 29 percent in 2025. And the Baby Boomer presence has dropped from 28 percent to 5 percent.

It’s a generational shift unlike anything the platform has seen. The new people maintaining today’s mainframes grew up with smartphones, voice assistants, and on-demand everything. They’re not intimidated by AI—they’re expectant.

Among Gen Z respondents, 73 percent believe the mainframe will continue to grow and attract new workloads. Among Millennials, 69 percent say the same. That optimism is powerful. When you believe the platform has a future, you’re willing to embrace the tools that will keep it relevant.

The data tells a story not just of demographic change but of cultural change. Mainframers are still deeply risk-averse when it comes to uptime, security, and performance—but they’re becoming more adventurous when it comes to innovation. They’re starting to see AI not as an interloper, but as a collaborator.

The Rise of the Agentic Mainframe

At the heart of this evolution lies something new: agentic AI.

Unlike traditional AI that simply analyzes data or generates recommendations, agentic AI can take action. It follows workflows, monitors results, and learns from feedback. Think of it as a digital coworker that doesn’t just point to a problem—it rolls up its virtual sleeves and fixes it.

On the mainframe, that means shifting from manual and semi-automated operations to agentic workflows: systems where AI agents handle the repetitive, rules-based, or time-sensitive tasks that once consumed hours of human attention.

Let’s take problem diagnosis as an example. In the past, diagnosing an issue might have involved scanning logs, checking thresholds, cross-referencing performance monitors, and running scripts. Today, an AI agent can aggregate telemetry, detect anomalies, identify probable causes, and even implement remedial actions—all while keeping the human operator in the loop.
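As a rough illustration of the "detect anomalies" step, here is a minimal Python sketch. It assumes telemetry arrives as a plain list of numeric samples and uses a simple trailing-window z-score. The function names, window size, threshold, and sample data are all hypothetical, and a production agent would likely use far richer models.

```python
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indexes whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


def triage(samples, **kwargs):
    """Package findings for review; the decision stays with the operator."""
    return [
        {"index": i, "value": samples[i], "action": "needs_operator_review"}
        for i in detect_anomalies(samples, **kwargs)
    ]


# Hypothetical CPU-busy percentages sampled at a fixed interval.
cpu_busy = [41, 43, 42, 40, 44, 42, 43, 95, 42, 41]

if __name__ == "__main__":
    for finding in triage(cpu_busy):
        print(finding)
```

The sketch only flags the spike and hands it to a human, which corresponds to the "alert" end of the spectrum. Identifying probable causes and applying a fix would sit on top of a step like this.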

Or consider backup decisions. Instead of relying on static schedules, AI agents can analyze workload patterns, predict data-change frequency, and dynamically adjust what and when to back up—optimizing resources while maintaining compliance.
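To make the idea of a change-driven schedule concrete, here is a small Python sketch. It assumes each dataset has an estimated change rate in percent per hour, plus a budget for how much unprotected change is tolerable. The function names, the 5 percent budget, the 1-to-24-hour bounds, and the dataset names are hypothetical placeholders, not values from the survey or any product.

```python
def backup_interval_hours(change_pct_per_hour, max_unprotected_pct=5.0,
                          min_hours=1.0, max_hours=24.0):
    """Hours between backups so that unprotected change stays within budget.

    The result is clamped to [min_hours, max_hours]; the upper bound stands in
    for a compliance rule such as "back up at least once a day".
    """
    if change_pct_per_hour <= 0:
        return max_hours  # nothing is changing; use the longest allowed interval
    hours = max_unprotected_pct / change_pct_per_hour
    return max(min_hours, min(max_hours, hours))


def plan_backups(change_rates):
    """Map each dataset to an interval, most urgent (shortest) first."""
    plan = {name: backup_interval_hours(rate) for name, rate in change_rates.items()}
    return dict(sorted(plan.items(), key=lambda item: item[1]))


# Hypothetical datasets with estimated change rates (percent per hour).
rates = {"PAYROLL.MASTER": 0.1, "ORDERS.ACTIVE": 10.0, "LEDGER.DAILY": 1.0}

if __name__ == "__main__":
    for name, hours in plan_backups(rates).items():
        print(f"{name}: every {hours:g} h")
```

The clamp is the design point: the agent can shorten or lengthen intervals as workloads shift, but it can never schedule outside the bounds that compliance sets.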

The outcome? Fewer mundane alerts, fewer manual interventions, and far more time for mainframe professionals to focus on architecture, modernization, and business outcomes.

In short, AI isn’t replacing the mainframer—it’s freeing them.

“Free the Human”

That phrase—Free the Human—came up repeatedly in our internal workshops as we analyzed these survey results. It captures the spirit of what’s happening across Dev, Ops, Data, and Storage.

Everywhere you look, teams are drowning in repetitive work. Developers spend hours trying to understand unfamiliar COBOL modules. Operators babysit routine performance tuning. Storage administrators juggle backup windows like air-traffic controllers.

AI agents can take those burdens and handle them consistently, accurately, and around the clock. When mainframers talk about “trusting AI to complete the action,” they’re not giving up control—they’re reclaiming their time.

This isn’t blind faith; it’s earned trust. The same way autopilot doesn’t remove pilots from the cockpit, agentic AI doesn’t remove experts from the mainframe. It just handles the flight path so the experts can focus on navigation, innovation, and the bigger mission.

Why Now?

Three converging forces are accelerating this shift:

- Data availability. Mainframe systems now generate and share more telemetry than ever—metrics, logs, SMF data, traces—that can feed AI models.

- Processing power. Hybrid AI frameworks allow inferencing on-platform or off-platform, integrating z/OS data with cloud-based LLMs and analytics engines.

- Cultural readiness. The workforce turnover mentioned earlier means a growing proportion of professionals who trust automation instinctively and see it as an enabler.

Combine those forces, and you get fertile ground for agentic workflows. The same operators who once triple-checked JCL jobs now monitor dashboards that visualize AI recommendations and actions. Instead of debugging COBOL manually, developers use generative AI assistants to explain unfamiliar code in natural language. Instead of manual post-mortems, AIOps tools automatically analyze logs and summarize root causes.

What was once science fiction—AI acting autonomously in the mainframe environment—is quickly becoming standard operating procedure.

A Day in the Life of Tomorrow’s Mainframer

Imagine a morning in the not-so-distant future.

You log in to your monitoring console. Overnight, your AI agent detected an anomaly in checkpoint pacing. It simulated three potential adjustments, executed the safest one, validated throughput improvements, and documented the change with a full audit trail.

Meanwhile, another agent flagged a Db2 query running long. It recommended a tuning adjustment, provided a performance forecast, and waited for your approval. You glance through the summary, see the logic, and click “approve.”

Later, a generative AI assistant explains the purpose of an obscure COBOL module you’re debugging, even highlighting potential test cases. A third agent finalizes a dynamic backup schedule for end-of-quarter workloads.

By noon, you realize you’ve spent the morning strategizing, not firefighting. That’s what “freeing the human” feels like.

The Trust Transition

Trust doesn’t appear overnight. It’s built through transparency, accountability, and results.

Mainframe professionals know that every automation layer introduces new risks. A misplaced command, a misinterpreted alert, a runaway script—these can have costly consequences. So the path toward full agentic automation isn’t about flipping a switch; it’s about gradual progression along the spectrum.

- Step 1: Let AI alert you to issues faster than humans can spot them.

- Step 2: Let AI recommend actions and back them up with explainable logic.

- Step 3: Let AI take limited actions within strict guardrails.

- Step 4: Expand scope as confidence and evidence grow.

That’s why we see the percentages distributed the way they are: roughly a quarter comfortable with alerts, nearly two in five comfortable with recommendations, and close to a third ready for completion. It’s an evolution in motion, not a revolution overnight.
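The progression through the four steps can be sketched as a simple policy gate. This is a hedged Python illustration, not a description of any product: the `Trust` enum mirrors the four survey options, while `agent_response`, its parameters, and the message formats are hypothetical.

```python
from enum import IntEnum


class Trust(IntEnum):
    """The four survey options, ordered from least to most autonomy."""
    NOT_AT_ALL = 0
    ALERT = 1
    RECOMMEND = 2
    COMPLETE = 3


def agent_response(task, proposed_action, trust, within_guardrails):
    """Return what the agent is allowed to do for `task` at this trust level."""
    if trust == Trust.NOT_AT_ALL:
        return None
    if trust == Trust.ALERT:
        return f"ALERT: {task} needs attention"
    if trust == Trust.COMPLETE and within_guardrails:
        return f"DONE: {proposed_action} (logged for review)"
    # RECOMMEND, or COMPLETE with the action outside its guardrails:
    # hand the decision back to a human.
    return f"RECOMMEND: {proposed_action} (awaiting approval)"


if __name__ == "__main__":
    print(agent_response("checkpoint pacing", "raise commit frequency",
                         Trust.COMPLETE, within_guardrails=True))
```

Expanding scope, the last of the four steps, then amounts to raising a task's trust level or widening its guardrails as evidence accumulates. An action that falls outside the guardrails drops back to a recommendation rather than failing silently.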

The key enabler here is explainability. When AI agents can articulate why they acted, mainframe teams move from suspicion to supervision. AI doesn’t need to be infallible—it needs to be understandable.

Governance Still Matters

Let’s be clear: autonomy without accountability is a non-starter in the mainframe world.

Agentic workflows must operate under robust governance frameworks—complete with approval checkpoints, audit logs, rollback capabilities, and compliance verification. Mainframe pros live in a world of SLAs, SOX, and regulatory oversight; they can’t afford “black box” decisions.

That’s why successful AI adoption depends on visibility. Every recommendation, every completed action, every model output should be traceable and reviewable. Only then can organizations truly trust AI as a teammate.
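One way to picture traceable, reversible actions is a setting that refuses to change without leaving a record. The following Python sketch is illustrative only: `GovernedSetting`, its fields, and the example setting name are assumptions made for this example, and a real deployment would write to a tamper-evident store rather than an in-memory list.

```python
import json
from datetime import datetime, timezone


class GovernedSetting:
    """A tunable value whose every change is logged and can be undone."""

    def __init__(self, name, value):
        self.name = name
        self.value = value
        self.audit_log = []

    def _record(self, kind, new_value, rationale, approved_by):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "setting": self.name,
            "kind": kind,
            "previous": self.value,
            "new": new_value,
            "rationale": rationale,
            "approved_by": approved_by,  # None means the agent acted alone
        }
        self.audit_log.append(entry)
        self.value = new_value
        return entry

    def apply(self, new_value, rationale, approved_by=None):
        """Change the value and log why it changed."""
        return self._record("change", new_value, rationale, approved_by)

    def rollback(self, rationale, approved_by=None):
        """Undo the most recent logged entry and log the rollback itself.
        Calling it twice in a row would undo the rollback."""
        if not self.audit_log:
            raise ValueError("nothing to roll back")
        previous = self.audit_log[-1]["previous"]
        return self._record("rollback", previous, rationale, approved_by)


if __name__ == "__main__":
    pacing = GovernedSetting("checkpoint_interval_seconds", 60)
    pacing.apply(30, "commit backlog growing", approved_by="operator")
    pacing.rollback("throughput dropped after change")
    print(json.dumps(pacing.audit_log, indent=2))
```

Because the rationale is stored with each entry, the same record serves both the audit trail and the explainability that reviewers need.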

It’s also why the new generation of mainframe leaders will need hybrid skill sets: part technologist, part data scientist, part risk manager. Tomorrow’s operations center might include roles like “AI Supervisor,” “Automation Auditor,” or “Trust Officer”—positions that bridge the gap between human oversight and machine autonomy.

Generational Synergy

There’s another dynamic at play here—one that’s less about code and more about culture.

As Baby Boomers retire, they leave behind deep institutional knowledge: unwritten rules, performance tuning tricks, and decades of intuition. Losing that expertise is a real concern. But AI can help capture and preserve it.

Generative AI systems can absorb documentation, JCL examples, and decades of system logs to replicate that institutional knowledge in natural language form. A new developer can literally ask, “Why did we choose this checkpoint frequency?” and get an answer distilled from historical context.

In that sense, AI becomes the bridge between generations—passing down expertise in a way that’s searchable, explainable, and always available. It’s mentorship at scale.

The combination of veteran knowledge and next-generation openness is what makes the current era so exciting. It’s not about replacing one generation with another; it’s about connecting them through AI.

Why the Mainframe Is the Perfect Testbed

If you think about it, the mainframe might actually be the ideal environment for agentic AI to prove itself.

It’s stable, governed, and data-rich. Its workflows are well-defined and auditable. Every change has a control point. These characteristics make it far safer to pilot automation here than in chaotic, unstructured environments.

And mainframe teams already understand discipline. They know how to test, validate, and back out changes if needed. So as AI takes on more operator roles, the mainframe’s inherent rigor becomes its best defense.

Rather than fearing AI’s rise, mainframe pros can treat it as the next generation of automation—one that operates with reasoning instead of rigid scripts.

The Human Element

All this talk of automation can sound clinical, but the truth is deeply human.

Every time a mainframer trusts AI to complete an action, it’s an act of courage. It’s saying, “I believe this system can help me, not hurt me.” It’s also an act of humility—recognizing that intelligent automation can process data faster, spot anomalies sooner, and optimize performance more efficiently than even the most seasoned expert.

But the most human part is what happens next: rediscovery of creativity. Once freed from the grind, mainframe professionals rediscover the joy of problem-solving, the curiosity that drew them to technology in the first place.

Where the Spectrum Leads

The trajectory is clear. Over the next few years, more mainframe tasks will move along the spectrum—from alerts to recommendations to completions. Agentic AI will become not the exception but the expectation.

We’ll see AI agents diagnosing issues before anyone notices an outage, dynamically tuning databases, orchestrating recovery processes, even coordinating with cloud environments to maintain hybrid efficiency.

Human oversight will remain central—but the nature of that oversight will evolve. Instead of executing every command, humans will design the rules, validate the logic, and interpret the outcomes.

That’s the future of trust.

The Bottom Line

The data from the 2025 BMC Mainframe Survey paints a picture of a community in transition: one that’s younger, more optimistic, and increasingly comfortable sharing control with intelligent systems.

The “Spectrum of Trust” isn’t just a statistic—it’s a mirror. It reflects how mainframe professionals see themselves and their role in the age of AI.

The question isn’t whether AI will be trusted to complete actions.

The question is: What will you do with the time it gives back to you?

Because in the end, this shift isn’t about technology—it’s about people. It’s about freeing the human so the human can do what only humans can: imagine what’s next.
