Unlocking the Value of Cheryl Watson’s Tuning Letter Back Catalog

If you work with IBM mainframes, you’ve probably heard of Cheryl Watson’s Tuning Letter, which I now have the pleasure of editing. For decades, it’s been one of the most trusted resources for performance tuning, capacity planning, and in-depth insights into the evolving world of z/OS and IBM Hardware.

One of the most valuable benefits of a subscription is full access to the entire back catalog—a treasure trove of mainframe knowledge stretching back decades.

Why does this matter?

The back issues contain decades of expert guidance and practical tuning tips. While some technologies change, others stay the same. They document how IBM technologies have evolved, providing a perspective that helps your team make better decisions today. It’s a working toolkit.

In this article from Cheryl Watson’s Tuning Letter archives, Sally Mir dives into Db2 SMF type 100 records to see what we can learn about Db2 buffer pools. Db2 buffer pools are likely the largest single user of memory in your z/OS systems, and their efficient operation is key to Db2’s ability to service your business. We hope you benefit from its insights.

-Mark Wilson, Editor, Cheryl Watson’s Tuning Letter

The Basics of Db2 Buffer Pools

What is a Db2 buffer pool? Basically, it is an area of virtual storage in the DBM1 address space that houses pages containing data from Db2 objects. A page must be in the buffer pool in order for Db2 to use it. Db2 page sets containing tablespaces and index spaces can be categorized as having 4K, 8K, 16K, or 32K page sizes, depending on the bufferpool they are assigned to.

When Db2 needs a page that is not already in the buffer pool, it must perform an I/O to disk and can either retrieve one page, 32 pages (most often for a 4K pageset), or up to 128 pages in some utility operations. Write operations can write up to 32 pages at a time, depending upon the page size.

Every Db2 subsystem has several buffer pools, with at least one for each page size. Objects should be placed into those buffer pools based on various characteristics. Buffer pools can be configured in different ways to handle different read/write behaviors. The metrics to pay attention to when tuning buffer pools are: the hit ratio, page residency time, and read I/O rate.

The metrics to pay attention to when tuning buffer pools are the hit ratio, page residency time, and read I/O rate.

Random Requests

Db2 data can be accessed randomly or sequentially, depending on the processing being performed by the associated applications. We will cover random access first.

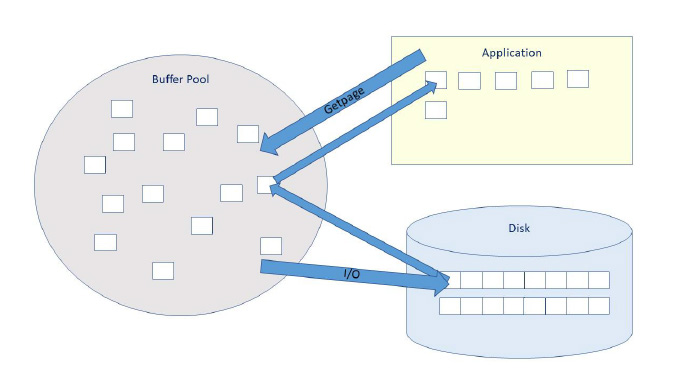

Figure 1 – Db2 Buffer Pools – The Big Picture

Figure 1 shows the path a single page takes when an application requests some data that is not already in the buffer pool. When an SQL SELECT statement is executed, a getpage request is issued in order to get the rows and columns from the appropriate page. In this example, Db2 find that the page is not in the buffer pool, so it must go to disk to perform a synchronous I/O to read the page, which is returned to the buffer pool, and then to the application.

This is a synchronous process from the perspective that the application must wait for these operations to complete before it can proceed. Obviously, it is far more efficient for the application if the pages it wants are already in the buffer pool. I/O to disk is expensive, both in CPU and in elapsed time.

Basically, the ratio of not-found pages to total getpages is called the hit ratio. (Technically, it is ((getpage_requests – pages_not_in_bp – pages_read_from_disk) / (getpage_requests – pages_not_in_bp)) * 100.). A high hit ratio is one indicator of an efficient buffer pool, but there are other indicators that are even more important than that: page residency time and read I/O rate are also important metrics to consider when tuning a buffer pool.

The hit ratio is the ratio of not-found pages to total getpages. The formula is ((getpage_requests – pages_not_in_bp – pages_read_from_disk) / (getpage_requests – pages_not_in_bp)) * 100.).

Pages can be requested and accessed either in a random pattern or a sequential pattern. Our example above shows the random access pattern, where one page at a time is requested, and in no particular order. Whereas random pages are accessed synchronously, sequential pages may be accessed by an asynchronous process called prefetch, which brings in multiple pages with one I/O. Prefetch is obviously more efficient than random synchronous I/Os, since the pages are brought into the buffer pool ahead of the time the application actually needs them.

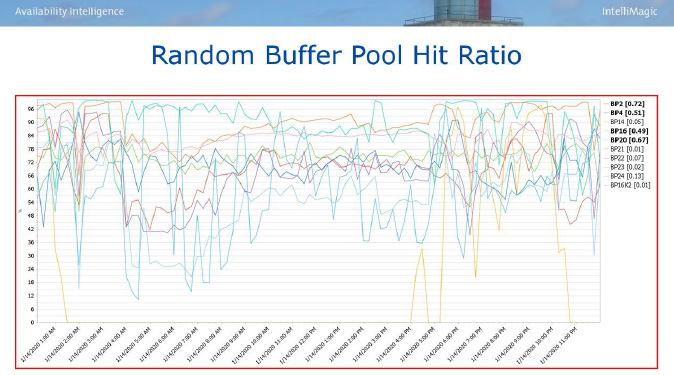

Figure 2 – Db2 Subsystem-Level View of Buffer Pool Hit Ratios Over Time (© IntelliMagic)

Figure 2 shows random buffer pool hit ratios for several buffer pools in a data sharing member. Many of them drop well below 50%, which is enough to trigger an exception in IntelliMagic Vision’s Health Insights. These buffer pools appear to need some attention because of the wide variation in the hit ratio throughout the day!

Random getpages can be difficult to tune because they depend on application behavior. Sometimes, if the buffer pool were made larger, the pages it contains would be able to remain there for a longer period of time. This might provide the opportunity to avoid another I/O if the page is re-referenced in the future.

On the other hand, the page may never be re-referenced, so making the buffer pool larger will just increase memory consumption, but not improve performance. It is recommended to enlarge the buffer pool incrementally to see if performance improves, and stop when it reaches a plateau.

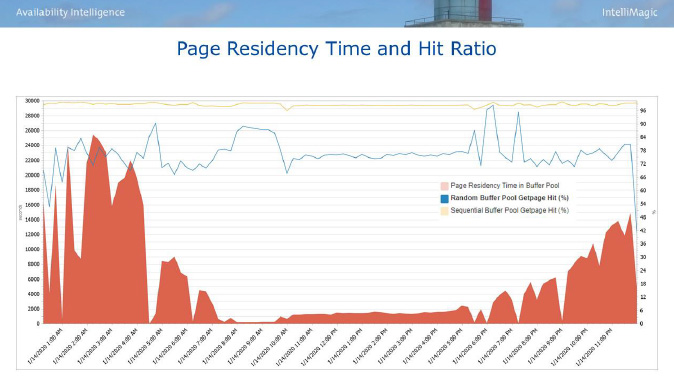

Figure 3 – Key Metrics for One Buffer Pool for One Day (© IntelliMagic)

Figure 3 displays the relationship between the getpage hit ratio and the page residency time (the average amount of time a page resides in the buffer pool) for one buffer pool over a 24-hour period. With the relative bump in the random getpage hit ratio at around 8 AM, the page residency time went much lower. The hit ratio then returned to normal and the page residency time increased a bit. This indicates that the pages that were in the buffer pool were sufficient to keep the random getpage hit ratio generally the same, even though they did not reside in the buffer pool for a long period of time. What does this mean to us? Well, in Db2 the answer is always, “It depends.” We must look at the actual amount of I/O work that is occurring during that period.

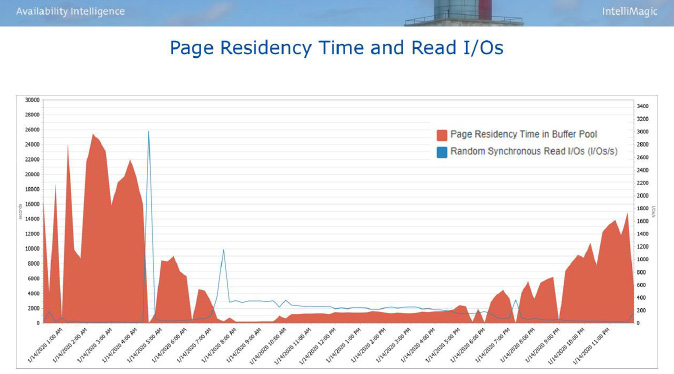

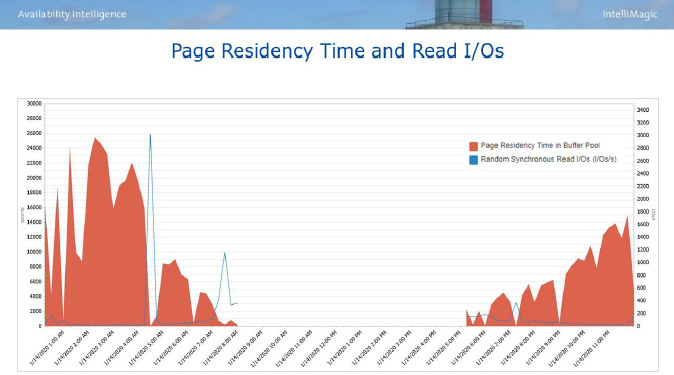

Figure 4 – Relationship Between Page Residency and Read I/O Rates (© IntelliMagic)

We can expect page residency time and random read I/O rate to have an inverse relationship, and in Figure 4 that is what we see here. If there are already sufficient pages in the buffer pool, then fewer physical I/Os will need to be performed, and the inverse is true as well. It is possible that by increasing the size of this buffer pool we could increase the page residency time and reduce the number of physical I/Os necessary to process the data during the online day. The random hit ratio would improve as well across the board.

However, the only way to know for sure is to increase the size and observe the behavior.

Figure 5 – Buffer Pool Batch Shift Activity (© IntelliMagic)

But look at the behavior in the early and late shifts in Figure 5. Just for an example, if we had a buffer pool that looked like this throughout the entire day, increasing the size of the buffer pool would probably not help performance, since the residency time is already high and the I/O rate is already low. Would this help the hit ratio? Probably not much. And it wouldn’t matter that much if it did, because the more important metrics are the page residency time and the I/O rate. Increasing the buffer pool would just be using more memory for no real benefit.

Consider a typical transactional system. Online transactions are quick, usually running just a handful of SQL statements. This would be using random access. The tables they access can be extremely large, but the data would usually be current data, or the most popular data. So the random pages would most likely be re-referenced many times throughout the day. It benefits us to keep them in the buffer pool for as long as possible.

However, when batch processes run, they run through large amounts of data, hopefully in the order in which the table is clustered (sequentially). Pages are typically accessed once, and not re-referenced. It does not benefit us to keep them in the buffer pool for long.

Sequential Requests

We’ve discussed random I/O, but we have barely touched on sequential I/O. Sequential I/O almost always uses an asynchronous prefetch mechanism that brings in multiple pages at one time. There are three types of prefetch: sequential prefetch, dynamic prefetch, and list prefetch.

Since many pages are asynchronously brought into the buffer pool in one I/O, prefetch is always substantially less expensive than performing individual random one-page I/Os. But bringing in such large amounts of data we run the risk of flooding the buffer pool with pages that will most likely not be re-referenced.

To protect you from sequential pages from pushing all your random pages out of the buffer pool, Db2 provides parameters that can be used to allow the random pages to remain in the buffer pool for a longer period of time.

Db2 provides parameters that can be used to allow the random pages to remain in the buffer pool for a longer period of time.

Some of those parameters are fixed and some are updatable. They are expressed as a percentage of the buffer pool that contains “dirty” pages – that is, pages that have been updated but not written to disk yet. The fixed thresholds, when exceeded, should be taken very seriously.

The fixed threshholds are:

- Prefetch Threshold, fixed at 90%, will disable all prefetch activity when 90% of the pages in the buffer pool are dirty. All of the benefits of asynchronous reads will be lost.

- Data Management Threshold, fixed at 95%, will cause Db2 to perform a getpage for every row read by the application, even if every row it needs to read is in the same page. This greatly increases the number of getpages performed.

- Immediate Write Threshold, fixed at 97.5%, will cause updated pages to be written synchronously out to disk.

Obviously, it is not desirable to reach any of these thresholds.

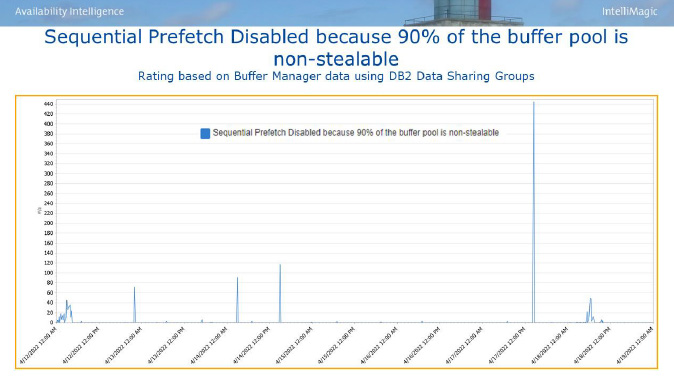

Figure 6 – Impact of Too-Small Buffer Pool (© IntelliMagic)

Figure 6 shows an example of a buffer pool that repeatedly exceeds the fixed Prefetch Threshold. The solution for this is to enlarge the buffer pool, or possibly to move some of the objects into another buffer pool. Designing buffer pools and assigning the placement of objects should go beyond creating a handful of buffer pools and taking the default thresholds.

Object Placement

We can go even deeper into the buffer pool by analyzing the objects that are placed there. Perhaps the objects that are producing the behavior in the day shifts in our example above are not the same objects that are being used during the off-shifts. By reassigning the objects that have different access attributes into different buffer pools, we can tune the buffer pools individually by setting certain thresholds to make the best use of the memory assigned to them.

Of course, this takes meticulous work and observation, and a lot of shops don’t have the staff to go this deeply into buffer pool tuning. However, there may well be some low-hanging fruit that you can go after that will deliver real benefits.

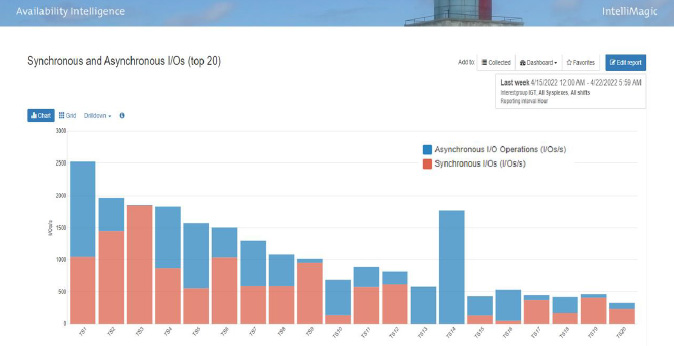

Figure 7 – Db2 I/O Rates by Buffer Pool (© IntelliMagic)

In Figure 7, we combine SMF 42 with SMF 102 records to see synchronous and asynchronous accesses to disk for pagesets by buffer pool. We can see that TS3 is all synchronous I/O, while TS13, TS14, and almost all of TS16 are all asynchronous. Perhaps they could be separated into separate buffer pools. Generally, this should be the practice. It might be worth the research to see if that would improve buffer pool performance. This is where the buffer pool hit ratio and page residency rate would be useful in determining the best approach.

Keep in mind that this chart shows sync and async accesses to disk, not all sync and async getpages. The sync I/Os could possibly represent pages that were read once and then kept in the buffer pool for a long period of time.

There are other buffer pool threshold settings that are updateable that control the behavior of random and sequential pages. The Sequential Steal Threshold (VPSEQT) controls the percentage of the buffer pool that is allowed to be occupied by sequentially accessed pages. This allows for space to be held for random (synchronous) pages, which will be kept longer in the buffer pool. The Deferred Write Threshold (DWQT) is the percentage of the buffer pool that can hold unavailable (“dirty”) pages before they are written out to disk. When this threshold is reached, Db2 asynchronously writes out the pagesets with the oldest updated pages, until the number of available buffers reaches 10% below the threshold. And the Vertical Deferred Write Threshold (VDWQT) is similar to DWQT, except it is granular at the pageset level.

Db2 Latch Suspensions

We’ve all heard about Db2 locks, but there is another serialization mechanism called a latch. Locks are used to control transaction concurrency; latches are there for another reason — consistency. They provide for serialization of resources, and they are broken out into many different latch classes. Obtaining and releasing latches usually happens extremely quickly; if everything is running smoothly, they are unnoticeable. But sometimes we encounter significant latch suspension time that is caused by activities in a buffer pool.

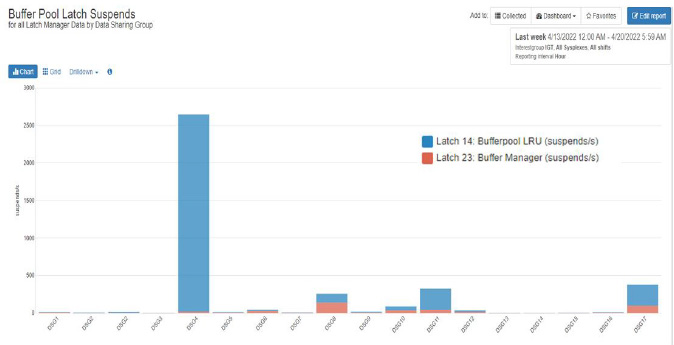

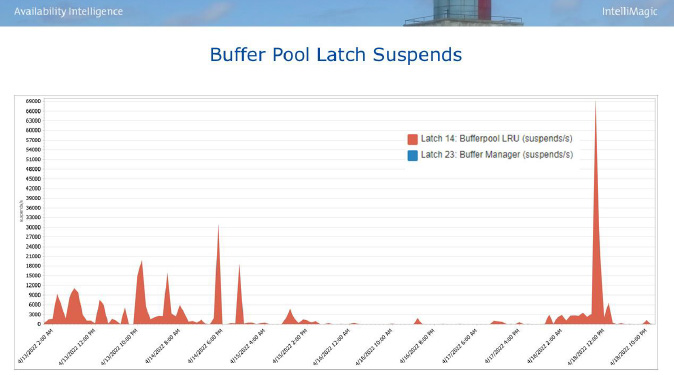

Figure 8 – Investigating Db2 BP Latch Suspends – Part 1 (© IntelliMagic)

The chart in Figure 8 indicates that one of the data sharing groups is experiencing a large number of Latch Class 14 suspensions. The X axis represents various data sharing groups, and the Y axis is the number of suspensions that occurred. Latch Class 14 describes latches within the buffer pool on the LRU hash chains.

By default, pages are removed from a buffer pool on a Least Recently Used (LRU) algorithm. This is most often the best way to manage the removal of pages, because it results in the frequently-used pages remaining in the buffer pool, therefore increasing the page residency time and reducing the I/O rate. The buffer manager must manage the chains of pages, which, of course, takes resources.

Figure 9 – Investigating Db2 BP Latch Suspends – Part 2 (© IntelliMagic)

Looking further into the latch suspensions, we drill down to a view over time as shown in Figure 9. We can see above that the suspensions happen throughout most of the day, with a few large spikes. To reduce the number of latch suspensions in this case, increase the size of the buffer pool in order to decrease hash contention. Also, objects that are frequently referenced could be split out into other buffer pools.

Occasionally, there may be a situation where a First-In, First-Out (FIFO) buffer management methodology is more suitable, so PGSTEAL(FIFO) could be used for such a buffer pool.

This would reduce the number of latches taken, because there is no LRU chain to manage. This can be used in a buffer pool where no or very little I/O is occurring. All pagesets assigned to the buffer pool can fit into it completely.

There is one more setting available to us, which is PGSTEAL(NONE). This is possible due to today’s large amount of memory on Z machines. A buffer pool defined with the PGSTEAL(NONE) setting is also called a contiguous buffer pool.

Today’s large buffer pools

We’ve discussed the workings of Db2 buffer pools, and the above contains the basics. With today’s ability to create very large buffer pools along with the use of page fixing and large frames, a lot of these buffer pool considerations can now be seen as less important.

Because of this, large buffer pool configurations can be standardized across multiple environments in a shop, and possibly fewer buffer pools created within each Db2 subsystem. This simplifies the administration of Db2 for systems programmers and DBAs.

Of course, it is very important to make sure that there is enough real storage to back all buffer pools, but considering the very large memory available on the latest processors, it is much easier to accommodate Db2’s needs for lots of memory than ever before.

Page Fixing and Large Frames

To use a page in a buffer pool for a read or write operation, it first must be fixed in memory to preserve it from being paged out during the operation. In order to avoid the cost of fixing and releasing buffer pool pages for every I/O, we can use the PGFIX(YES) option.

This fixes all pages in that buffer pool, which saves CPU cycles and time, and can be a great tuning opportunity for buffer pools with high read/write activity. Db2 will not allow PGFIX(YES) buffer pools to exceed the real storage capacity of the machine. After 80% of capacity is reached, Db2 will change a PGFIX(YES) buffer pool to PGFIX(NO), so be very sure that you have enough real storage to back these fixed buffer pools. Otherwise, things can go south very quickly.

Taking the benefits of page-fixing the buffer pools one step further, Db2 can make use of large frame sizes. Using 1 MB or 2 GB frames along with PGFIX(YES) relieves some of the virtual-to-real address translation. The frame size and maximum number of frames is set in the LFAREA parameter in the IEASYSxx member of SYS1.PARMLIB. Fixed 1 MB frames will by default back PGFIX(YES) buffer pools if they are available. The use of 2 GB frames should be reserved for truly enormous buffer pools.

Fixed 1 MB frames will by default back PGFIX(YES) buffer pools if they are available

The PGSTEAL(NONE) buffer pool, also known as the contiguous buffer pool in Db2 12, is one that can be used in very specific cases. When an object that is assigned to this buffer pool is first opened, all of its pages are pre-loaded into the buffer pool. The contents of the buffer pool are laid out exactly as they are on disk. The pool must be large enough to hold the entire object, as well as up to 6400 additional buffers to be used as overflow buffers. This should be used for objects that have a predictable size and that are highly-referenced.

Pages that do not fit into the contiguous area are placed in the overflow buffers, where they remain until the pageset is closed. Pages in the overflow area are managed with LRU chains; pages in the contiguous area do not need to use pool management processes because of the way they are laid out in the buffer pool.

This type of arrangement can provide significant performance improvements over the traditional non-page-fixed, non-large-frame buffer pool; however, sizing it correctly is extremely important in order to take advantage of the benefits. PGSTEAL(NONE) is only available for use with 4 KB and 1 MB frames; if 2 GB is specified, Db2 will use 4 KB frames instead.

Buffer Pools in a nutshell

From the beginning, Db2 has intelligently used storage in order to reduce the amount of physical I/O required to retrieve and save Db2 pages. More and more tuning knobs have been added over time, helping to manage sequential vs. random I/O, page stealing algorithms, differing page sizes, and eventually page fixing and large frames.

As the mainframe evolves, so does Db2. As today’s business applications grow into sizes never dreamed of in the early days of Db2, Db2 evolves to handle reading and writing data more and more efficiently. Professionals who work with this DBMS should be evolving, too, keeping up with the latest innovations to take advantage of the power that Db2 offers us.

References

If you would like to know more about the topics covered in this article, the following materials might be helpful:

- Robert Catterall’s blogs: https://robertsdb2blog.blogspot.com/

- IBM Redbook, Subsystem and Transaction Monitoring and Tuning with DB2 11 for z/OS, SG24-8182

- IBM Redbook, IBM Db2 12 for z/OS Performance Topics, SG24-8404

- IBM Redpaper, IBM DB2 11 for z/OS Buffer Pool Monitoring and Tuning, REDP-5092

- IBM Manual, section titled Tuning database buffer pools in IBM Db2 12 Managing Performance, SC27-8857.

Excellent writing by Sally.