Treating Artifacts as Code in DevOps

The DevOps process relies heavily on automation of the software development lifecycle stages to achieve maximum agility while drastically shortening the time to market with new and higher quality business applications and functions.

Combining the development and operations teams can help reduce the time it takes to deploy new code from development through test and into production. But there are still manual processes that take time and are error prone.

Some have tried to address this problem by treating many of the non-code artifacts as if they were just a different type of code. While this can work if they follow a simple grammar, it doesn’t often work well with batch workflows.

What is a Batch Workflow?

Batch programs may evoke a picture of 1960s mainframes using punch cards and magnetic tape drives. In reality, batch has modernized along with most other IT systems and is used by companies that process very large amounts of data that must remain consistent, without any end-user interaction.

Because the data may be loaded from different sources, the online systems are usually prevented from making changes during the batch run to ensure consistency.

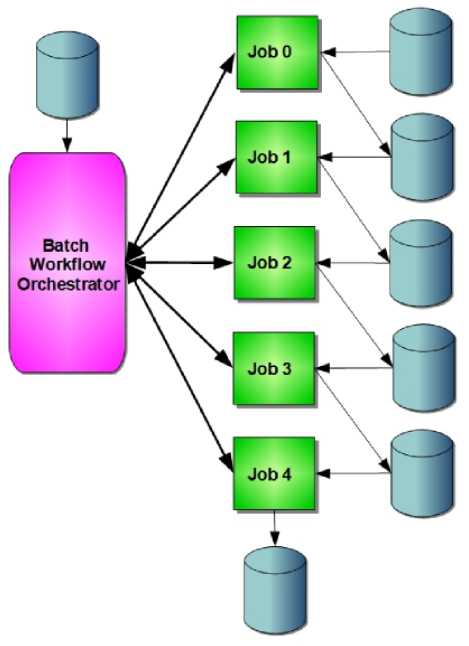

A batch workflow is a set of background application programs that run as jobs in a controlled sequence. You may have heard this called application workflow orchestration.

Batch Workflow Schedulers

Tools that orchestrate the batch workflows are called schedulers. Schedulers use a sequence of instructions that tells them when and how to run the batch workflow.

The language of batch workflow schedulers varies based upon the vendor and the target system. Many job schedulers have their own integrated development environments to specify the instructions.

The process of creating a batch workflow starts with creating individual batch job definitions. A job can have many application job steps that are run sequentially and require very specific resources as input and output files.

Once the individual job definitions are completed, they can be combined into workflows that are executed by the batch workflow scheduler.

Defining the Batch Jobs

While most scheduler programs offered by software vendors provide useful tools to define the interactions between the jobs that make up the batch workflow, often the actual job definitions are left to be created manually by the operations team.

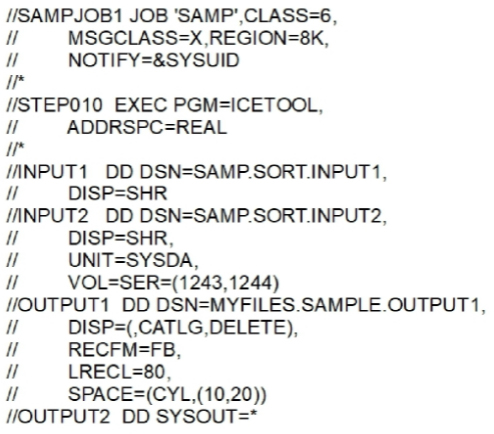

Job definitions include the name of the program to run and the resources needed to run it. On IBM’s z/OS operating system, the jobs are defined using Job Control Language (JCL).

JCL for a single job can include multiple steps that invoke different programs with unique resource requirements. Manual creation of jobs is cumbersome and error prone, thus becoming an obstacle to the agility and quality expected in a DevOps environment.
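To make the structure of a job definition concrete, here is a minimal Python sketch that models a job with its steps and data sets and renders it as JCL text. The class names, the `(ACCT)` accounting field, the `PAYCALC` program, and the data set name are all hypothetical placeholders chosen for illustration. A real generator would also need to handle statement continuation past column 71 and many more JCL parameters.

```python
from dataclasses import dataclass, field


@dataclass
class DD:
    name: str          # DD name, e.g. INFILE
    dsn: str           # data set name
    disp: str = "SHR"  # disposition


@dataclass
class Step:
    name: str
    program: str
    dds: list[DD] = field(default_factory=list)


@dataclass
class Job:
    name: str
    description: str
    job_class: str = "A"
    msg_class: str = "X"
    steps: list[Step] = field(default_factory=list)


def render_jcl(job: Job) -> str:
    """Render a Job as JCL text, one statement per line.

    Simplified on purpose: no continuation lines, no validation.
    """
    lines = [
        f"//{job.name:<8} JOB (ACCT),'{job.description}',"
        f"CLASS={job.job_class},MSGCLASS={job.msg_class}"
    ]
    for step in job.steps:
        lines.append(f"//{step.name:<8} EXEC PGM={step.program}")
        for dd in step.dds:
            lines.append(f"//{dd.name:<8} DD DSN={dd.dsn},DISP={dd.disp}")
    return "\n".join(lines)


# Hypothetical single-step job used only for illustration.
payroll = Job(
    name="PAYROLL1",
    description="NIGHTLY PAYROLL",
    steps=[Step("STEP010", "PAYCALC", [DD("INFILE", "PROD.PAYROLL.INPUT")])],
)
jcl_text = render_jcl(payroll)
print(jcl_text)
```

Keeping the job as data and the JCL as a rendered view is what lets the same definition be regenerated whenever a standard changes, instead of being edited by hand.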

DevOps Solutions

Imagine having a single place to define not only batch jobs, but all of the scripts and scheduling artifacts needed to automate batch workflows.

Imagine that, instead of using manual processes to define each of these components, you could define rules and variables that are used to generate the batch workflow artifacts.

Imagine the freedom to choose the batch workflow scheduler that is best suited for you without having to learn new development tools.

The best modern mainframe-based DevOps solutions eliminate many of the roadblocks to successfully using DevOps for agility and quality in batch workflows.

They use functional descriptions and your company’s in-house rules to automate release management, and can use this information to create any type of script, JCL, scheduler input and other components, regardless of the scheduler, to greatly simplify batch workflow changes.

Defining Global Variables and Rules

By using environment variables and rules as functional descriptions, a capable DevOps solution can reduce errors that are typically introduced by having to create the individual batch workflow artifacts manually.

By reducing manual errors in batch workflow artifacts, you can avoid the considerable expense of problem determination: tracking down where an error was introduced and fixing it.

By storing these environment variables and rules inside a secure central repository, you can quickly make changes to target artifacts.

By generating the batch workflow artifacts automatically, you can save the time it would take to create a change request and pass that along to the operations team to be manually built.
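As a rough illustration of how environment variables and rules might drive generation, the Python sketch below resolves one functional description against per-environment variables and checks the result against a naming rule. The variable names (`HLQ`, `JOBPFX`, `JOBCLASS`), the environment values, and the description fields are assumptions made up for this example and do not reflect any particular product. The rule itself follows the standard JCL job name convention of one to eight characters, starting with a letter or national character.

```python
import re
from string import Template

# Hypothetical per-environment variables kept in one central place.
ENVIRONMENTS = {
    "QA": {"HLQ": "QA", "JOBPFX": "Q", "JOBCLASS": "T"},
    "PROD": {"HLQ": "PROD", "JOBPFX": "P", "JOBCLASS": "A"},
}

# Rule: a JCL job name is 1-8 characters, the first alphabetic or
# national (@ # $), the rest alphanumeric or national.
JOB_NAME_RULE = re.compile(r"[A-Z@#$][A-Z0-9@#$]{0,7}")


def resolve(description: dict[str, str], env: str) -> dict[str, str]:
    """Substitute environment variables and enforce the naming rule."""
    variables = ENVIRONMENTS[env]
    resolved = {
        key: Template(value).substitute(variables)
        for key, value in description.items()
    }
    if not JOB_NAME_RULE.fullmatch(resolved["job_name"]):
        raise ValueError(f"invalid job name for {env}: {resolved['job_name']!r}")
    return resolved


# Hypothetical functional description, written once for all environments.
PAYROLL = {
    "job_name": "${JOBPFX}PAYROLL",
    "input_dsn": "${HLQ}.PAYROLL.INPUT",
    "job_class": "${JOBCLASS}",
}

qa = resolve(PAYROLL, "QA")
prod = resolve(PAYROLL, "PROD")
print(qa)
print(prod)
```

Because the rule is checked at generation time, a name that breaks the standard is rejected before any artifact reaches the scheduler.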

Creating and Editing Objects

The capability to browse and select objects for editing is critically important. You also need to be able to create new objects of selected types. Jobs would contain a functional description that would eventually be used to create JCL. Individual components would be reusable, and should be logged and subject to change control in case changes need to be rolled back.

Generating Batch Workflow Artifacts

These components are used to create complex batch workflows, including the JCL, scripts, and other scheduler artifacts for many different scheduler types, including IBM Workload Scheduler, BMC Control-M, CA/7, and others. Beyond support for mainframe schedulers, the ability to generate artifacts for purely distributed schedulers such as CA AutoSys, $Universe Workload Automation, Visual TOM, etc., would also be beneficial.

A good solution would be able to define as many physical environments and software development lifecycle (SDL) stages as possible, including multiple test stages in your SDL. Flexibility is key for an effective solution that you hope to bolt onto an existing environment.

Looking at a Generated Batch Job’s JCL

The ability to view generated JCL is also a critical component in any modern mainframe-based DevOps solution. JCL for multiple department uses (e.g., Quality Assurance and Production environments) would be similar, but with name changes applied as part of the generation and change control processes. IT personnel can view the differences using a JCL viewer.

Specifying environment variables and then using them to generate jobs that conform to internal standards is superior to any manual process. Generated JCL is consistent by construction, so its quality improves automatically.
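The kind of comparison a JCL viewer offers can be approximated with a plain text diff. The sketch below uses Python's standard `difflib` module on two small, hypothetical JCL listings for QA and Production. The job names, program name, and data set names are invented for illustration.

```python
import difflib

# Hypothetical generated JCL for two environments.
qa_jcl = [
    "//QPAYROLL JOB (ACCT),'NIGHTLY PAYROLL',CLASS=T,MSGCLASS=X",
    "//STEP010  EXEC PGM=PAYCALC",
    "//INFILE   DD DSN=QA.PAYROLL.INPUT,DISP=SHR",
]
prod_jcl = [
    "//PPAYROLL JOB (ACCT),'NIGHTLY PAYROLL',CLASS=A,MSGCLASS=X",
    "//STEP010  EXEC PGM=PAYCALC",
    "//INFILE   DD DSN=PROD.PAYROLL.INPUT,DISP=SHR",
]


def jcl_diff(a: list[str], b: list[str], a_name: str, b_name: str) -> list[str]:
    """Return a unified diff between two JCL listings."""
    return list(
        difflib.unified_diff(a, b, fromfile=a_name, tofile=b_name, lineterm="")
    )


diff = jcl_diff(qa_jcl, prod_jcl, "QA", "PROD")
print("\n".join(diff))
```

In this example only the JOB statement and the data set name differ, while the EXEC statement appears as unchanged context. That is the pattern you would expect when both listings come from the same functional description.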

Putting the Jobs together in a Workflow

Jobs would be viewed and managed in an IBM Workload Scheduler for z/OS application, which runs them in the order specified in the application definition. You would set this up by specifying internal and external predecessors for each job in the workflow application. The component jobs themselves would already have been defined.
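Predecessor relationships form a dependency graph, and a valid run order is a topological ordering of that graph. The Python sketch below illustrates the idea with the standard `graphlib` module (Python 3.9 or later). It is not how IBM Workload Scheduler itself is implemented, and the job names are hypothetical. A predecessor that is not defined in this application, here `GLCLOSE`, stands in for an external predecessor.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical application: each job maps to the set of its predecessors.
# GLCLOSE is not a key, so it represents an external predecessor that
# belongs to some other application.
predecessors = {
    "EXTRACT": {"GLCLOSE"},
    "VALIDATE": {"EXTRACT"},
    "PAYCALC": {"VALIDATE"},
    "REPORT": {"PAYCALC"},
    "ARCHIVE": {"PAYCALC"},
}


def run_order(preds: dict[str, set[str]]) -> list[str]:
    """Return this application's jobs in an order that honors predecessors.

    External predecessors constrain the order but are not listed, because
    they are run by another application. Raises CycleError when the
    dependencies are circular.
    """
    order = TopologicalSorter(preds).static_order()
    return [job for job in order if job in preds]


order = run_order(predecessors)
print(order)
```

Jobs with no dependency between them, such as `REPORT` and `ARCHIVE`, may appear in either order. A real scheduler could run such jobs in parallel.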

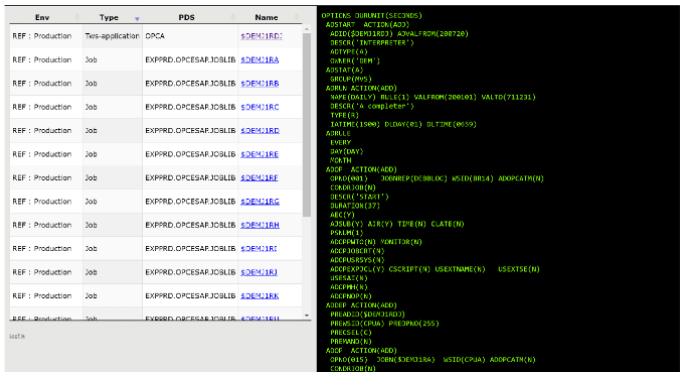

Looking at a Generated IWS Application

The partial screenshot below shows the IBM Workload Scheduler for z/OS (IWS) application that was generated. This application was built from the functional model previously defined in the DevOps solution.

You can see that the IWS application has a very specific syntax and many control statements and parameters. Getting these correctly specified the first time is very difficult. Making changes can be challenging.

A well-designed mainframe-based DevOps solution takes the risk out of making manual changes to the IWS application, and could require 75% fewer manual activities, resulting in much higher quality.

The IWS application is a scheduler artifact that is used to automate batch workflows. Other schedulers use their own artifacts and terminology.

Client-Server Architecture

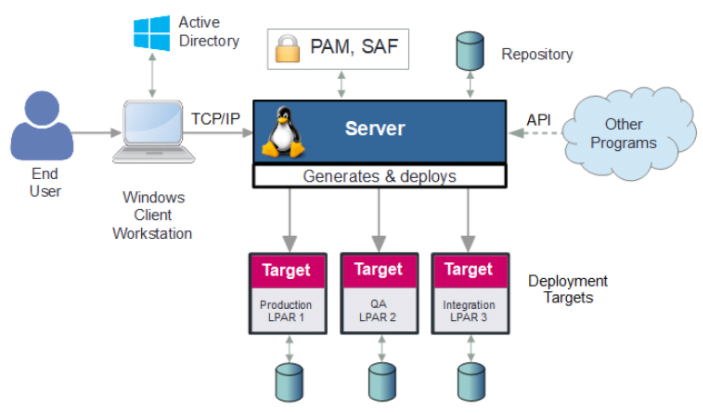

The ideal architecture for this type of solution is a client-server model. The client workstation would run in a Windows environment and connect with the DevOps server over TCP/IP. Clients should be deployable on individual workstations, shared directories, or through Citrix. APIs should be available to communicate requests programmatically to enable DevOps pipeline integration. Ideally, servers should be deployed to z/OS, Linux on IBM Z, Unix, Linux, or Windows. The diagram below shows a server on a Linux system.

Deployment targets shouldn’t have to reside on the same platform as the server. For instance, you should be able to deploy to a z/OS platform from a server running on Linux as shown in the diagram.

Agent and iPack are other ISC products that can help make the deployment process even simpler.
