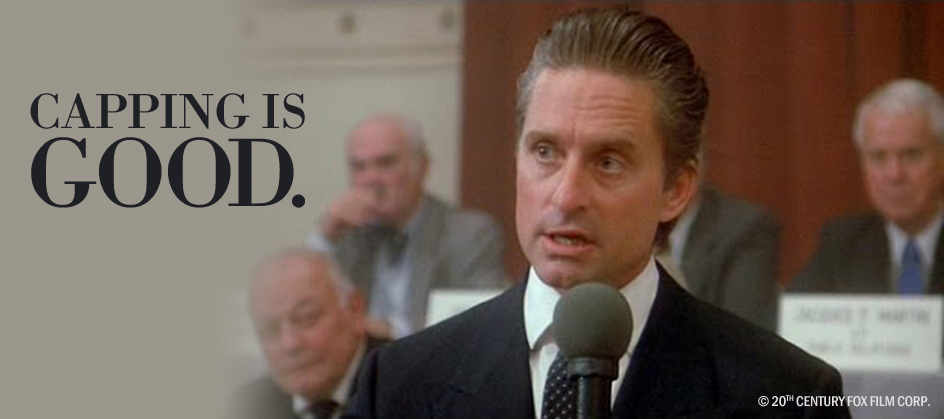

Or, Capping is no longer a bad word

Okay, yes it is – nobody in their right mind is going to cap their business-critical workloads. Yes you want to save on operational spend – in fact you need to – but not at the expense of completing business-critical workloads on time. I mean, just forget about it! Not happening. Move on.

So now that we have agreed to never cap important workloads, I think we still are interested in saving on operational expense though, right? I mean that’s a major internal pressure for everyone in the IT organization; we all know that. And with MLC costs projected to increase… no, make that expected to increase… okay they absolutely WILL increase… well, that just makes the cost control pressure even greater.

However, there are ways to do both – to safeguard your business-critical workloads, AND to help control the cost of running those workloads – at the same time. More than that, you may actually help those workloads run faster. Sounds unbelievable, I know. Worse than that, it sounds risky.

But the truth is that you can do all of it – believe it. How? Capping. Okay, hold on, don’t stop reading. This isn’t some crazy circular argument – there are ways to do this, and to do it without risk. Critical workloads will complete on time – maybe even faster. And you’ll save on operational expense, without the risk.

So how does that work? Automation. More precisely, by automating a process that otherwise is both manual and highly risky.

IBM soft capping

Some background information – IBM’s soft capping feature was introduced in response to customer needs: to help control MSU costs that were running very high for some customers, especially those in growing, transaction-intensive environments.

In these environments, MSU usage, and the MLC based on its rolling 4-hour average (R4HA), can be particularly spiky. Usage is not flat and predictable, but can rise steeply for a few hours or a day – resulting in very large MLC charges based on one or two resource usage spikes within a charge period. (The monthly charge is based on the peak R4HA within a given month.)
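To see why a single spike matters so much, here is a minimal sketch of a rolling average and its monthly peak. The hourly readings and the function name are made up for illustration, and a real system samples far more often than once an hour; actual sub-capacity billing also involves more than this one number.

```python
from collections import deque

def rolling_average(samples, window):
    """Return the average of the most recent `window` samples at each step."""
    recent = deque(maxlen=window)
    averages = []
    for msu in samples:
        recent.append(msu)
        averages.append(sum(recent) / len(recent))
    return averages

# Hypothetical hourly MSU readings: flat at 100, with one two-hour spike to 500.
hourly_msu = [100] * 6 + [500, 500] + [100] * 6

# With hourly samples, a window of 4 samples covers 4 hours.
r4ha = rolling_average(hourly_msu, window=4)
peak_r4ha = max(r4ha)

print(f"peak instantaneous usage: {max(hourly_msu)} MSU")
print(f"peak R4HA: {peak_r4ha:.0f} MSU")
```

In this toy data the workload sits at 100 MSU almost all the time, yet the two-hour spike alone pushes the peak rolling average to three times that level, and the peak is what sets the charge.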

IBM soft capping works. It helps to suppress the resource usage spikes (and the associated MLC peaks) by artificially capping usage at a given level. The problem is that the whole soft capping mechanism is largely misunderstood – and is therefore mostly avoided by the mainframe shops that need it the most.

Soft capping challenges

Soft capping allows you to manually set limits on MSU usage, based on past usage knowledge and some type of understanding (agreement?) on how much cost is too much. The problem is that once we start capping the critical workloads, we lose interest in cost savings. Slowing those workloads down is often politically disagreeable, and can incur increased costs in other ways – SLA non-compliance ramifications, for one thing.

On the up-side, soft capping also allows for manual intervention to make changes if the capping of certain workloads is causing problems. For example, there may be intense pressure from the finance department to control rising costs, but there can be equal or more intense pressure from other departments to prevent critical workload performance reduction. If that happens, you can go in and tweak it manually.

While effective, the manual aspect of this capability throws some risk into the equation. The process can often be laborious and time-consuming, especially in complex, heavy-use, multi-business-unit, multi-LPAR environments – i.e., most mainframe shops. Without proper care (or expertise), the process can also become error-prone, and can lead to unintended (and costly!) results.

So we have a tool that can be used to control costs, but you must allocate SysAdmin or DBA time and resources to make it effective. And you don’t have any excess in human IT capital to spend on it – who does? What can you do about this situation? Well, the answer is automation; specifically, automated soft capping.

Automated soft capping

Automated soft capping essentially takes the risk out of soft capping. There are a few soft capping automation solutions out there – the best ones are applicable to both batch and online processing, and allow you to borrow MSU resources from one LPAR and apply them to another LPAR running the critical workload(s). We’re talking about MSUs that you’re already paying for.

An automated solution dynamically and automatically controls the defined capacity for each LPAR. Each individual LPAR is fine-tuned to leverage available white space and provide capacity on demand by taking into account the capacity needs of all LPARs. Being able to dispatch additional work on a specific LPAR – while operating under the R4HA – will control your MLC while increasing capacity and throughput for critical workloads.
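As a rough illustration of the idea, the sketch below redistributes a fixed MSU budget across LPARs, giving spare capacity to the most critical LPAR first. Everything here is hypothetical: the `rebalance` function, the LPAR names, and the `demand`, `priority` and `floor` fields are assumptions made for the example, and it does not call any real IBM or vendor interface. Commercial products apply their own, more sophisticated policies.

```python
def rebalance(lpars, total_msu):
    """Split a fixed MSU budget across LPARs (hypothetical policy).

    lpars maps an LPAR name to a dict with:
      demand   - MSUs the LPAR currently wants
      priority - lower number means more critical
      floor    - minimum MSUs the LPAR always keeps
    Returns a dict of LPAR name -> defined capacity.
    """
    # Start every LPAR at its guaranteed minimum.
    caps = {name: spec["floor"] for name, spec in lpars.items()}
    spare = total_msu - sum(caps.values())
    if spare < 0:
        raise ValueError("floors exceed the total MSU budget")

    # Hand the spare MSUs (the white space) to the most critical LPARs first.
    for name, spec in sorted(lpars.items(), key=lambda kv: kv[1]["priority"]):
        extra = min(spare, max(0, spec["demand"] - caps[name]))
        caps[name] += extra
        spare -= extra
    return caps

# Made-up example: PROD is busy, TEST and DEV can give up some headroom.
lpars = {
    "PROD": {"demand": 450, "priority": 1, "floor": 200},
    "TEST": {"demand": 120, "priority": 2, "floor": 50},
    "DEV":  {"demand": 150, "priority": 3, "floor": 50},
}
caps = rebalance(lpars, total_msu=600)
print(caps)
```

The total never exceeds the budget you are already paying for; the only thing that changes is which LPAR gets to use it. An automated tool would rerun a decision like this continuously as demand shifts, which is exactly the part that is painful to do by hand.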

Of course, the most important aspect of an automated solution is the automation. The ability to change LPAR capacity settings on the fly is the key to saving on operational cost, and an automated system does that far faster than a manual process, with far less room for error.

Soft capping is good when it comes without the capping part

The idea of soft capping is a no-brainer when you first think about it – anything that helps control rising costs is a great idea. The problem is the capping part. Saving operational cost is less exciting when your boss or an executive throws lightning bolts at you for lowering the performance of critical workload processing. So, how about this – soft capping, with the cost savings – but without the capping? Yeah, I want me some of that! Fortunately, that is actually a real thing. You should look into it.

