A Novel Analogy

Whether you are looking at computer source code history or you are interested in fraud cases, everything can be seen as a story—or a novel, really, linking together the adventures of several characters in expected and sometimes unlikely ways.

More complex novels like Infinite Jest by David Foster Wallace are created by weaving individual stories together into a grand and intricate narrative. Each character’s journey is a thread in the larger narrative fabric.

But let’s say you want to understand a single character’s role in the story. The challenge is following a single thread without losing any relevant detail from the whole.

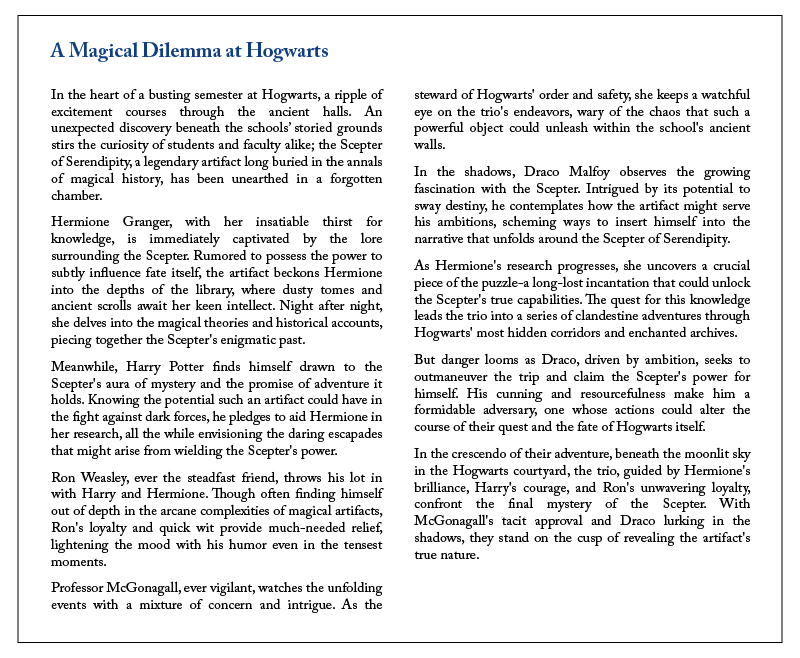

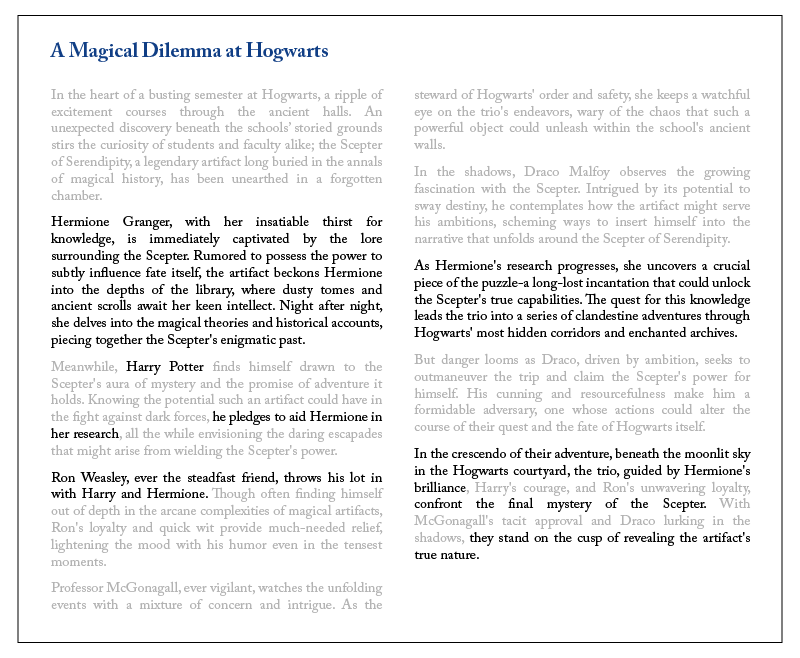

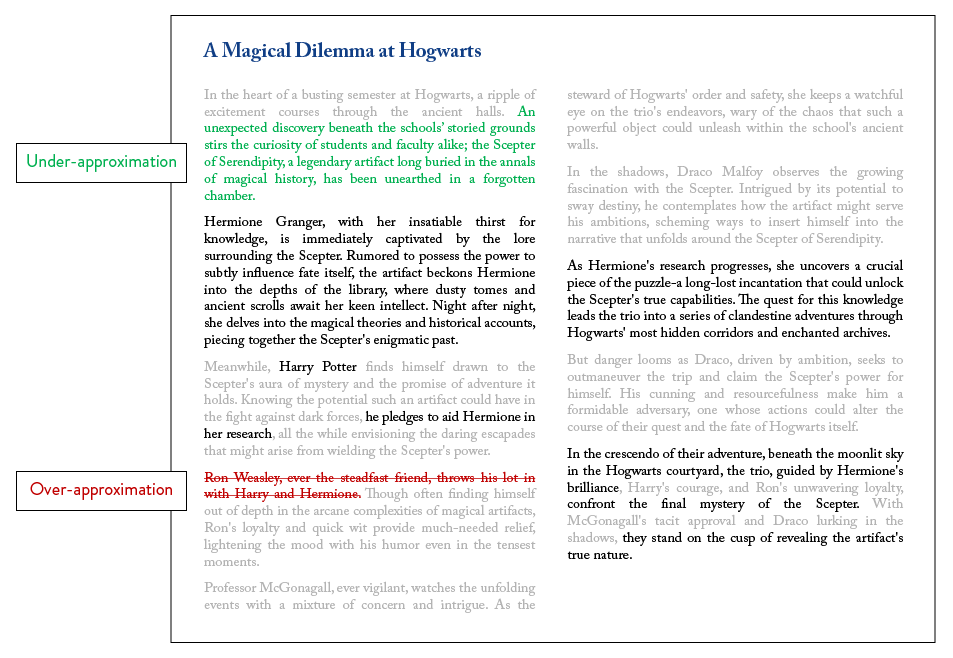

For example, you could ask an AI, “What is Hermione’s narrative in this Harry Potter text?” The first thing the AI does is extract all the elements related to Hermione from the raw data, that is, the novel.

That may sound reasonable, but this approach will present two risks of bias:

1 Under-approximation

Certain parts of the story, even if they do not directly concern Hermione, have a very strong impact on her storyline. Ignoring these parts of the text eliminates critical details necessary to understand Hermione’s full story, leaving the reader with an incomplete understanding of certain decisions and outcomes.

2 Over-approximation

In contrast, some narrations, even if they are thought-provoking, only have an indirect connection with the character. In this situation, the reader expends mental energy filtering out irrelevant information.
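One way to put numbers on these two risks is to borrow precision and recall from information retrieval: under-approximation looks like low recall, over-approximation like low precision. The sketch below is only an illustration. The passages, their hand-made labels, and the helper `precision_recall` are hypothetical and are not taken from any real novel or tool.

```python
# Hypothetical toy data: each passage is labelled by hand. Nothing here
# comes from a real text.
passages = [
    {"id": 1, "mentions_character": True,  "relevant": True},
    {"id": 2, "mentions_character": True,  "relevant": True},
    {"id": 3, "mentions_character": False, "relevant": True},   # indirect but decisive
    {"id": 4, "mentions_character": False, "relevant": False},
    {"id": 5, "mentions_character": False, "relevant": False},
]

def precision_recall(selected_ids, passages):
    """Return (precision, recall) of a selection against the hand labels."""
    relevant_ids = {p["id"] for p in passages if p["relevant"]}
    selected = set(selected_ids)
    hits = len(selected & relevant_ids)
    precision = hits / len(selected) if selected else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall

# Narrow extraction: keep only passages that name the character.
narrow = [p["id"] for p in passages if p["mentions_character"]]
# Broad extraction: keep everything.
broad = [p["id"] for p in passages]

narrow_precision, narrow_recall = precision_recall(narrow, passages)
broad_precision, broad_recall = precision_recall(broad, passages)

print(f"narrow: precision={narrow_precision:.2f}, recall={narrow_recall:.2f}")
print(f"broad:  precision={broad_precision:.2f}, recall={broad_recall:.2f}")
```

The narrow selection misses passage 3, so its recall drops (under-approximation). The broad selection keeps passages 4 and 5, so its precision drops (over-approximation).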

Catastrophic interference

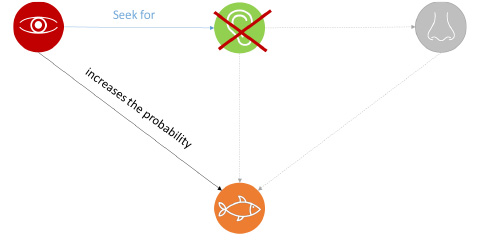

To explain catastrophic interference as an AI challenge, imagine a bear goes to a river to catch a salmon. For the bear, the view of a river, the sound of flowing water, and the distinct smell of salmon are the likely predictors for the presence of fish. The three elements (a river, flowing water, and the scent of salmon) always go together.

To fully understand this, recall how reinforcement learning works: an AI chooses among the permitted options by favoring the one most likely to succeed, based on the results of previous attempts. See the University of Oxford research on neural plasticity for more.

If a Bear were an AI

If our bear were an AI, after multiple attempts, it would learn that the view of the river, the sound of flowing water, and the smell of salmon were the elements that gave the highest probability of success in salmon fishing. These three stimuli naturally become associated, so the AI would also link their probabilities of appearance to each other. Seeing the river increases the likelihood of hearing the water, which increases the likelihood of smelling salmon, which increases the likelihood of catching salmon in the river.

But one day, the bear sees the river, but it does not hear the sound of the water – perhaps because of an ear injury. What would happen if the bear acted like an AI?

If the bear’s brain worked like an AI, the bear would register an error: the view of the river did not produce the expected sound. The AI would then not go on to check its expectation against the smell, because the probability of success would already be too low. Even if the bear saw a river and smelled fish, the AI bear would not go fishing, because it could not hear the sound and all three elements must be present.

In deep learning, training continually adjusts the weight given to each input based on previous outcomes, whether those outcomes were successes or failures. The mechanism that carries out this adjustment is known as backpropagation (backward propagation of errors).

For our bear, the situation described above will be counted as a failure. As a result, the AI will reduce the weight of fish odor in its estimate of the probability of success in salmon fishing. And that’s a mistake for the bear.
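The adjustment described above can be sketched with the smallest possible model: a single logistic unit with one weight per stimulus, updated by one gradient step after a failed trial. This is an assumption-laden toy, not how any particular AI system is built. The starting weights, the bias, the learning rate, and the function names `predict` and `update` are all hypothetical.

```python
import math

def predict(weights, bias, inputs):
    """Estimated probability of a successful catch from one logistic unit."""
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-z))

def update(weights, bias, inputs, outcome, learning_rate=0.5):
    """One gradient step on the log loss for a single trial."""
    error = predict(weights, bias, inputs) - outcome
    new_weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * error
    return new_weights, new_bias

# Inputs are [sight of river, sound of water, smell of salmon]; 1 = present.
weights, bias = [1.0, 1.0, 1.0], -1.0   # hypothetical starting values
trial = [1, 0, 1]                        # river seen, no sound, salmon smelled

# The trial is recorded as a failure (outcome 0), as in the bear's story.
new_weights, new_bias = update(weights, bias, trial, outcome=0)

for name, old, new in zip(["sight", "sound", "smell"], weights, new_weights):
    print(f"{name}: {old:.3f} -> {new:.3f}")
```

The weights for sight and smell both go down, because those inputs were present during the failure, while the weight for sound is untouched. The model ends up trusting the smell of salmon less, even though the smell was not the problem.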

Neural Plasticity

One important characteristic distinguishes the natural brain from artificial neural networks: plasticity, the ability of a brain to continually create connections between nodes (neurons). This capacity is absent from AI models; they tend to forget what they have assimilated when learning a new task.

In a very famous experiment conducted by McCloskey and Cohen in 1989, a neural network was trained to solve addition problems based on examples containing the number 1.

It was then fed another set of problems containing the number 2. From then on, the neural network learned to solve problems containing a 2 but forgot how to solve those containing the number 1.

Based on the data provided, the neural network dynamically created paths between its nodes during the training phase. However, when it was fed new information, it formed new paths that did not include the original ‘1’ paths. This is what pushes an algorithm to “forget” previously constructed sequences.
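A rough feel for this interference can be had from a far simpler model than a neural network. The sketch below trains a three-weight linear model on “plus 1” problems, then on “plus 2” problems only, and measures the error on the “plus 1” problems before and after. It is a toy under stated assumptions, not a reproduction of the 1989 experiment. The model, the data, the learning rate, and the helper names `train` and `mse` are all hypothetical.

```python
def train(weights, examples, steps=5000, learning_rate=0.02):
    """Full-batch gradient descent on squared error for a linear model."""
    w = list(weights)
    n = len(examples)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for a, b, target in examples:
            features = (a, b, 1.0)  # two operands plus a constant bias input
            error = sum(wi * xi for wi, xi in zip(w, features)) - target
            for i, xi in enumerate(features):
                grad[i] += error * xi / n
        w = [wi - learning_rate * gi for wi, gi in zip(w, grad)]
    return w

def mse(weights, examples):
    """Mean squared error of the linear model on a set of examples."""
    total = 0.0
    for a, b, target in examples:
        prediction = weights[0] * a + weights[1] * b + weights[2]
        total += (prediction - target) ** 2
    return total / len(examples)

ones = [(a, 1, a + 1) for a in range(1, 10)]   # problems containing a 1
twos = [(a, 2, a + 2) for a in range(1, 10)]   # problems containing a 2

w = train([0.0, 0.0, 0.0], ones)
error_on_ones_before = mse(w, ones)

w = train(w, twos)                              # new task only, no rehearsal
error_on_ones_after = mse(w, ones)
error_on_twos_after = mse(w, twos)

print("error on ones before:", error_on_ones_before)
print("error on ones after: ", error_on_ones_after)
print("error on twos after: ", error_on_twos_after)
```

The same weights serve both tasks, so fitting the second task moves them away from the values that solved the first. Mixing old and new examples in one training set is a common way to reduce the effect, at the cost of keeping the old data around.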

Stupid Intelligence

As Yann LeCun, a world expert in deep learning, points out, the names artificial “intelligence” and even machine “learning” are misleading. If we consider that these tools present intelligence, then it bears no resemblance to that of humans. It is hyper-specialized and not transferable from one domain to another.

This human trans-intelligence characteristic is also at the origin of something that is sorely lacking in the machine: what we could describe as “common sense.”

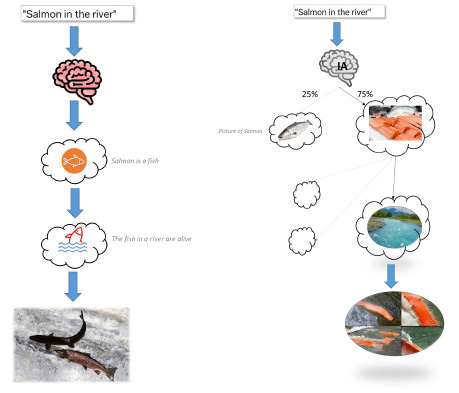

I think everyone has seen this AI fail where someone sent the prompt “Salmon in the river” to generate a photo and got the following result. Even if the result obtained is rather funny, it is certainly not what the photo was supposed to represent.

Let’s try to understand what led to this photo by working backward and asking, “Why did an AI think it was relevant to represent slices of wild salmon in a wild river to answer this question?” Was it the prompt? Was it the AI training?

For one answer, typing “salmon photo” into an internet search bar returns four times more photos of slices of salmon than photos of live salmon. Does this mean humans are more passionate about cooking than about wild nature? Maybe. What is certain, though, is that an AI will choose the slice of salmon as the most relevant for the requested prompt.

The human brain, in contrast, first filters a question by a system of “beliefs” so intrinsically linked to our thought process that it becomes implicit for us. Returning to my initial question, yes, AI technology generates erroneous beliefs.

This system of “beliefs” is difficult to reconcile with deep learning mechanisms, even for symbolic AI. That means AI remains dependent on the clarity of the initial request. In this case, a prompt “Picture of living salmon in a river” would produce the desired images.

The Art of Asking Well

Your mother was right when she told you, “Knowing how to ask correctly makes things easier!” In AI’s case, politeness is not enough. Adding “please” at the end of each of your prompts will not affect the results, unfortunately.

As we have seen, the thought construction of AI is quite far from human logic. This has advantages for optimizing the processes implemented. But, although you can converse with an AI in a ‘natural language,’ there is actually nothing natural about the way you chat with an AI.

The art of prompting is emerging as a new profession that aims to master the formulation of questions and statements to obtain the most relevant and precise answers.

Here’s a tip: To maximize the effectiveness of your AI prompts, include two elements: context and instructions. Adding context helps the model better understand the scenario, while the instructions tell it what you expect it to do.
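The tip above can be turned into a small template so that neither element is forgotten. This is a minimal sketch, assuming the prompt is sent as plain text. The function name `build_prompt`, the section labels, and the example context are hypothetical and not tied to any specific AI product.

```python
def build_prompt(context: str, instructions: str) -> str:
    """Combine context and instructions into one prompt string."""
    if not context.strip() or not instructions.strip():
        raise ValueError("Both context and instructions are required.")
    return (
        f"Context:\n{context.strip()}\n\n"
        f"Instructions:\n{instructions.strip()}"
    )

prompt = build_prompt(
    context="I am illustrating an article about wildlife in rivers.",
    instructions="Generate a picture of living salmon in a river.",
)
print(prompt)
```

Raising an error on an empty field is a design choice: it makes a missing context or instruction visible before the prompt is sent, instead of after a disappointing answer.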

The Helpfulness of AI…in Three Decades

In looking ahead to how AI will affect productivity, an excellent article by Karen Karniol-Tambour and Josh Moriarty explores how most studies predict that impacts on productivity will not occur now, or even in 2030 or 2040.

History shows that general-purpose technologies that are widely implemented have a measurable impact on productivity approximately one to three decades after the emergence of these capabilities—three decades!

Does this mean we should ignore AI? Honestly, I don’t think that’s a sensible choice. Even if your job is never replaced by an AI, you might be replaced by a person who uses an AI to help them do the same work.

This is part two of Gatien Dupré’s article on AI. Read part one.
