There is increasing talk of quantum computers and how they will allow us to solve problems that traditional computers cannot solve in practice. It’s important to note that quantum computers will not replace traditional computers: they are intended for a different class of problems from those that classical mainframe computers and supercomputers already handle well. Any problem that is impossible in principle for a classical computer will also be impossible for a quantum computer; the advantage lies in problems that would take a classical machine an unreasonably long time. And traditional computers will remain better suited than quantum computers to everyday tasks such as sending and receiving e-mail messages, managing documents and spreadsheets, desktop publishing, and so on.

There is nothing “magic” about quantum computers. Still, the mathematics and physics that govern their operation are more complex than those of classical machines and are rooted in quantum physics.

Quantum Physics

The idea of quantum physics is still surrounded by an aura of great intellectual distance from the vast majority of us. It is a subject associated with the great minds of the 20th century such as Werner Heisenberg, Niels Bohr, Max Planck, Wolfgang Pauli, and Erwin Schrödinger, whose famous hypothetical cat experiment was popularized in an episode of the hit TV show ‘The Big Bang Theory’. Schrödinger’s thought experiment serves as a reminder of the enigmatic nature of quantum mechanics. In the standard interpretation of the theory, some characteristics of a particle (such as spin or position) take on a definite value only at the moment of measurement. Schrödinger illustrated this with a theoretical experiment known as the paradox of Schrödinger’s cat. The experiment is worth mentioning, as it describes one of the most important aspects of quantum computing. Suppose there is a cat in a closed box where a mechanism (with which the cat obviously cannot interfere) may or may not trigger the emission of a poisonous gas. From the outside, the outcome is impossible to know: to find out whether the gas has been released, it is necessary to open the box. Until it is opened, the cat is in an indeterminate state, in a sense both alive and dead at once.

With this experiment Schrödinger did not intend to trigger the sensibilities of cat lovers such as Ernest Hemingway; rather, the Austrian physicist wanted to highlight the idea that the act of observation determines the result of the observation itself. Schrödinger’s cat ended up becoming one of the best-known symbols of the new physics because it epitomizes the less intuitive aspects of the theory. Quantum physics describes the behavior of particles at the atomic scale. Yet the world we experience is made up of tangible things, and our descriptions of physical phenomena are based on tangible observations and common experience. Newtonian physics and its laws are easily experienced and seen in everyone’s daily life. But the quantum world turns intuition upside down. That’s why the theory seems so distant and carries an aura of mysticism. Niels Bohr famously proclaimed: “Those who are not shocked when they first come across quantum theory cannot possibly have understood it.” All of this makes it more curious still that the future of computing and information, or at least one aspect of it, is being built on the fascinating but seemingly impenetrable discipline of quantum physics.

The Limits of Traditional Computers

To understand the concept of quantum computing it’s best to begin with an explanation of the limits of more ‘traditional’ information technology. Certainly, the past 30 years have been characterized by the spread of information technology—which has combined with telecommunications—to an extent that was unimaginable 40 years ago. In the mid-70s, the idea of computers becoming household items was, if not entirely unthinkable, seen as highly improbable. In the 1990s, at the dawn of the internet, personal computers started to become popular but they were not yet ubiquitous, and their use was limited compared to current iterations with their ultra-realistic graphics, immediate response, social media, entertainment, massive libraries of images, books and countless other functions. And all that, with processing abilities over 100 times more powerful than the best machines of the 90s, fits in the pocket of your jeans.

The special ingredient in the rapid evolution of information processing machines, and of computers in general, has been the transistor and the ability to make transistors smaller and more powerful. Improvements in production processes allow ever greater numbers of transistors to be interconnected in ever smaller spaces. Thus, if a 1990s computer contained millions of transistors, today’s machines contain, as Carl Sagan was pleased to say, billions and billions. Modern transistors are measured in nanometers, and a current example might be 10 nanometers. That’s several orders of magnitude smaller than the width of a human hair. At these sizes it has become much more difficult to shrink further; indeed, the scales involved would quickly reach the atomic level, making it almost impossible to pursue the same technological evolution that has worked until now. That evolution has been described by Moore’s law: computing power, as measured by the number of transistors on a chip, doubles roughly every 18 months to two years. Moore’s law has held for the past 50 years, but it is reaching, if it has not already reached, its expiry date, suggesting that conventional computing technology is close to saturation.
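To get a feel for what a fixed doubling period implies, the arithmetic behind Moore’s law can be sketched in a few lines of Python. This is only an illustration: the function name `transistor_growth` and the 30-year horizon are assumptions chosen for the example, and the two doubling periods (18 and 24 months) are the commonly quoted figures rather than measured data.

```python
def transistor_growth(years: float, doubling_months: float = 18.0) -> float:
    """Growth factor after `years` if the count doubles every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


# Compare the two commonly quoted doubling periods over a 30-year span.
for months in (18.0, 24.0):
    factor = transistor_growth(30, months)
    print(f"doubling every {months:.0f} months: x{factor:,.0f} over 30 years")
```

With an 18-month period, 30 years contain 20 doublings, a factor of roughly one million; with a 24-month period there are 15 doublings, a factor of about 33 thousand. Either way the growth is exponential, which is why it cannot continue once transistors approach atomic dimensions.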

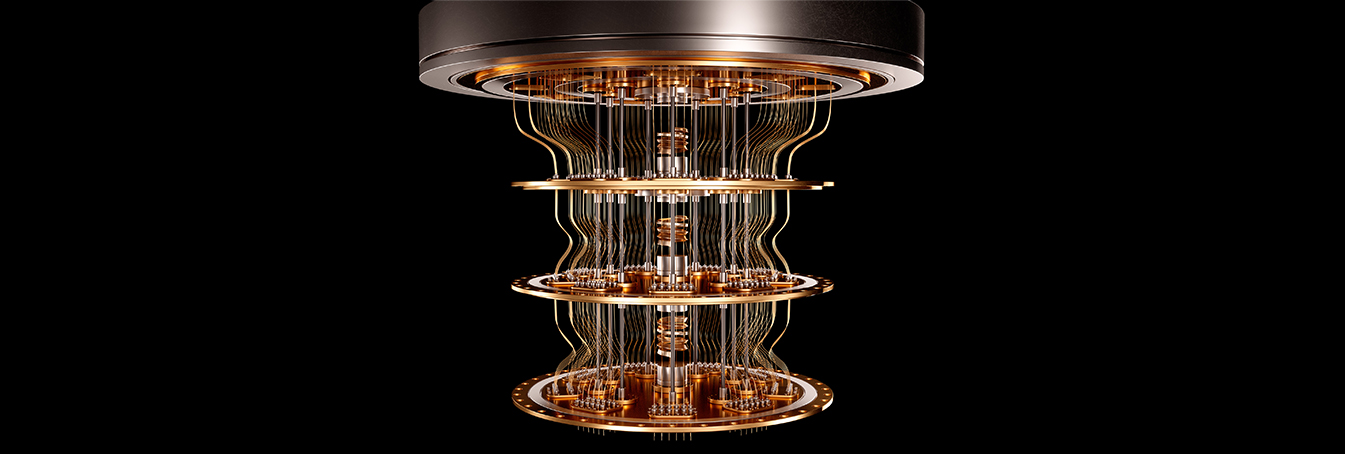

Just as a new physics had to replace Newtonian physics for the study and understanding of the world at the atomic and molecular level, in the early eighties Richard Feynman proposed exploiting quantum mechanics to create the next generation of computers. However, even though more than thirty years have passed since that theoretical conception in 1982, quantum computers remain in an embryonic state and their potential is not yet fully understood. They are intended to perform certain complex calculations much faster than even the most advanced traditional computers. They are able, at least in theory, to perform operations that a traditional processor could never carry out, at least not in a reasonable amount of time. This is the so-called quantum supremacy: in 2019 Google claimed to have achieved it with its Sycamore processor, which completed in just 3 minutes and 20 seconds a very complex mathematical task that, by Google’s estimate, would take the most powerful computer in the world at the time, IBM’s Summit, some 10 thousand years. IBM, for its part, is aiming to develop quantum computing gradually, in both software and hardware, so that it becomes ever more relevant to the challenges of the future.

A New Paradigm

Quantum computers are based on different paradigms from those underlying classical machines. They exploit phenomena such as the quantum superposition of states and entanglement, and they can be built from electrons, molecules, photons, or even small electronic circuits that exhibit quantum effects. Some also rely on spin, an intrinsic property of particles such as the electron that is often pictured, loosely, as a rotation. Unlike classical computers, which use binary bits to represent information as 0 or 1, quantum computers use qubits, which can represent a combination of 0 and 1 at the same time thanks to quantum superposition (information units are encoded in the quantum state of a particle, and that state can be a blend of several basic states at once). Quantum superposition is what allows quantum computers to work with many candidate solutions at once, rather than one binary value at a time.
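The difference between a bit and a qubit can be made concrete with a very small simulation. The sketch below is a toy model in plain Python, not how a real quantum computer is programmed: a qubit is represented as a pair of amplitudes, the Hadamard gate (a standard gate that creates an equal superposition) is applied to it, and a measurement picks 0 or 1 at random with probability equal to the squared amplitude. The helper names `hadamard`, `probabilities`, and `measure` are illustrative assumptions.

```python
import math
import random


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    a0, a1 = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Probability of reading 0 or 1: the squared magnitude of each amplitude."""
    return tuple(abs(a) ** 2 for a in state)


def measure(state, rng=random):
    """Simulate a measurement: the qubit yields a plain 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1.0, 0.0)        # behaves like a classical bit set to 0
plus = hadamard(zero)    # equal superposition of 0 and 1

print("probabilities in superposition:", probabilities(plus))
print("ten simulated measurements:", [measure(plus) for _ in range(10)])
```

Before measurement the state carries both amplitudes; each measurement still returns a single ordinary bit. This is why quantum algorithms have to be designed so that the useful answer ends up with a high probability.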

However, quantum computers are still at a very early stage of development and face significant technical challenges. One major obstacle is decoherence, which occurs when qubits lose their quantum coherence through interaction with the external environment. This makes it difficult to keep qubits stable long enough to perform complex calculations. Despite these challenges, research is advancing rapidly, and quantum computers have the potential to revolutionize many areas, such as cryptography, molecular modeling, complex system simulation, and process optimization. It will still be many years before they become widespread and capable of tackling large-scale complex problems. It should also be emphasized that, at present, the calculations performed by quantum computers are largely mathematical exercises that have little to do with what the real applications of quantum computing could be, even if they demonstrate the potential for rapid calculation.
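Why decoherence limits the length of a calculation can be illustrated with a simple back-of-the-envelope model. The sketch below assumes, as a common simplification, that coherence decays exponentially with a characteristic time `t2_us`; the figures used (a coherence time of 100 microseconds, a gate time of 0.1 microseconds, and a 50% threshold) are hypothetical values chosen for illustration, not measurements of any real device.

```python
import math


def remaining_coherence(t_us: float, t2_us: float) -> float:
    """Fraction of coherence left after t_us, assuming exponential decay."""
    return math.exp(-t_us / t2_us)


def max_gates(t2_us: float, gate_us: float, min_coherence: float) -> int:
    """How many sequential gates fit before coherence drops below the threshold."""
    time_budget_us = -t2_us * math.log(min_coherence)
    return int(time_budget_us // gate_us)


# Hypothetical device: 100 us coherence time, 0.1 us per gate, 50% threshold.
print("coherence after 50 us:", remaining_coherence(50.0, 100.0))
print("gates available:", max_gates(100.0, 0.1, 0.5))
```

Under these assumptions only a few hundred operations fit into the available time, which gives a sense of why longer coherence times and error correction are central research goals.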

Ultimately, speed is their least interesting aspect. Like a young Wolfgang Amadeus Mozart performing blindfolded on the fortepiano, it is a circus act that reveals only a fraction of their potential. Certainly, such demonstrations confirm that quantum computers can be faster than traditional ones at specific tasks. But quantum computers work at the invisible, microscopic level. They are machines that could emulate nature itself and therefore help provide a far better understanding of ultra-complex systems such as the climate, or of the logic needed to enable self-driving vehicles. They could even allow for the design of proteins and accelerate the development of new drugs by letting scientists design at the molecular level. They could truly serve as the foundation of the kind of technology that has so far only lived in the imagination of science fiction writers.

At the forefront of computing technology, IBM has built an advanced quantum computer in Zurich, Switzerland. Along with Google, IBM has understood the strategic importance of developing quantum technologies. An intense competition is emerging between these two technology giants to achieve a marketable and usable quantum computing technology. For the time being the technology is confined to a few units that are difficult to build and work only in highly controlled environments. To grasp where quantum computing stands today, consider the role that the first computers played in the 1950s. Over the next few decades, quantum computers should likewise start to bear fruit.
