Nobody can deny that the amount of data we store and manage continues to grow rapidly. A recent study by analysts at IDC concludes that what they call the Global Datasphere will reach 175 zettabytes by 2025.

This data growth is being driven by many different industry trends and patterns. Mobile usage continues to grow unabated. At the same time, we are connecting more data-generating devices to the Internet of Things (IoT), storing more data for analysis and machine learning, creating data lakes, and copying data all over the place.

As a result, organizations are looking to process, analyze, and exploit this data accurately and quickly. This is especially true for mainframe sites, where optimizing data usage and access can deliver big returns on decision-making as well as big savings.

So how can organizations choose the best data storage for each type of usage? Well, it helps to think about the different types and usages of data at a high level. If we consider data along two axes, volatility and usage, we can map out where it makes sense to store the data, and which IBM technologies we can bring to bear to optimize it.

From a volatility perspective, there is a continuum ranging from data that never or rarely changes to data that changes frequently. From a usage perspective, there is a continuum ranging from mostly analytical, decision-support usage to transactional usage that conducts day-to-day business operations.

These continuums are outlined in the chart below. As you review the chart, keep in mind that transaction processing is typified by short queries that get in, do their work, and get out, whereas analytics processing typically requires longer-running queries. The chart also calls out two other types of data: reference data and temporary data.

Reference data is data that defines the permissible values for other data elements (columns or fields). Reference data is typically widely used and referenced by many applications. Additionally, reference data does not change very often (hence its position near the bottom of the volatility continuum on the chart). Temporary data, as the name suggests, exists for a period of time during processing but is not stored persistently (which is why it is depicted near the top of the volatility continuum on the chart).
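To make the idea of reference data concrete, here is a minimal Python sketch of a small table of permissible values being used to validate other records. The `ORDER_STATUS_REF` codes and the `invalid_status_rows` helper are hypothetical illustrations; on a mainframe this role would typically be played by a Db2 or VSAM table that many applications read but rarely update.

```python
# Hypothetical reference table: permissible values for an order-status column.
# The codes and descriptions are illustrative assumptions, not from any real system.
ORDER_STATUS_REF = {
    "N": "New",
    "S": "Shipped",
    "C": "Cancelled",
}


def invalid_status_rows(rows, ref=ORDER_STATUS_REF):
    """Return the rows whose 'status' value is not a permissible reference value."""
    return [row for row in rows if row["status"] not in ref]


orders = [
    {"order_id": 1, "status": "N"},
    {"order_id": 2, "status": "X"},  # "X" is not defined in the reference table
    {"order_id": 3, "status": "S"},
]
bad = invalid_status_rows(orders)
print([row["order_id"] for row in bad])  # [2]
```

Because every application that validates order status consults the same small, stable table, it is read constantly but changes rarely, which is exactly the profile described above.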

With this framework in mind, let’s dig in and look at the options shown in the center of the chart. For the most part, we assume that mainframe data is stored in IBM Db2 for z/OS, although of course not all of it is. For analytical processing, IBM provides the IBM Db2 Analytics Accelerator (aka IDAA).

IBM Db2 Analytics Accelerator

IDAA is a high-performance appliance for analytical processing that is tightly integrated with Db2 for z/OS. The general idea is to enable HTAP (Hybrid Transactional/Analytical Processing) against the same Db2 for z/OS database. IDAA stores data in a columnar format that is ideal for speeding up complex queries, sometimes by orders of magnitude.
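Why does a columnar format help analytical queries? The following Python sketch stores the same small table two ways, row by row and column by column, and runs the same filtered total against each. It illustrates the general technique only, not IDAA's internal storage format; the table, column names, and values are hypothetical.

```python
# Hypothetical sales data; column names and values are illustrative only.
rows = [
    (1, "EAST", 120.0, "2024-01-03"),
    (2, "WEST", 75.5, "2024-01-04"),
    (3, "EAST", 30.0, "2024-01-05"),
    (4, "NORTH", 99.9, "2024-01-05"),
]


# Row-oriented layout: each record is stored together, so a scan steps through whole records.
def total_row_store(rows, region):
    return sum(amount for _id, reg, amount, _date in rows if reg == region)


# Column-oriented layout: each column is stored contiguously on its own.
columns = {
    "id": [r[0] for r in rows],
    "region": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
    "date": [r[3] for r in rows],
}


def total_column_store(columns, region):
    # Only the two columns the query needs are read; "id" and "date" are never touched.
    return sum(
        amount
        for reg, amount in zip(columns["region"], columns["amount"])
        if reg == region
    )


print(total_row_store(rows, "EAST"))        # 150.0
print(total_column_store(columns, "EAST"))  # 150.0
```

Both layouts return the same answer; the difference is how much data has to be read to get it. With wide tables and billions of rows, skipping the columns a query does not reference is a large part of why columnar stores excel at analytics.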

Data loaded into IDAA goes through a hashing process that maps it to multiple disks across different blades. The primary purpose of spreading the data over multiple disks is to enable parallelism: searches run across multiple disks (portions of the data) at the same time, which improves efficiency.
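The general idea of hash distribution can be sketched in a few lines of Python. This is a generic hash-partitioning example, not IDAA's actual hashing scheme; `NUM_PARTITIONS`, `partition_for`, and `distribute` are hypothetical names, and `zlib.crc32` is simply a convenient stable hash.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 4  # assumption: stands in for the number of disks/blades


def partition_for(key: str, n: int = NUM_PARTITIONS) -> int:
    # crc32 is stable across runs (Python's built-in hash() of str is randomized per process).
    return zlib.crc32(key.encode("utf-8")) % n


def distribute(rows, key_field):
    """Place each row in the partition chosen by hashing its distribution key."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[partition_for(row[key_field])].append(row)
    return partitions


rows = [{"cust_id": f"C{i:04d}", "balance": i * 10} for i in range(1000)]
parts = distribute(rows, "cust_id")
for p in sorted(parts):
    print(p, len(parts[p]))  # rows per partition; a good hash keeps these roughly even
```

Because the hash is deterministic, a given key always lands in the same partition, and because it spreads keys roughly evenly, each partition holds a similar share of the work when a query scans them in parallel.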

Db2 for z/OS automatically decides which queries are appropriate for execution on IDAA. When a query runs on IDAA, it is distributed across multiple blades. Each blade delivers a partial answer to the query, based on the portion of the data on its disks. Combining the partial results from all of the blades produces the final query result.
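The partial-answer-then-combine pattern (often called scatter-gather) is sketched below in Python for an average with a filter. The blade contents and function names are hypothetical, and a thread pool stands in for separate blades; it shows the structure of the computation rather than how IDAA implements it.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data already split across "blades"; each inner list is one blade's slice.
blades = [
    [5, 12, 7],
    [20, 3],
    [9, 14, 1, 6],
]


def partial_sum_count(values, threshold):
    """Work done on one blade: filter locally and return a partial (sum, count)."""
    matching = [v for v in values if v > threshold]
    return sum(matching), len(matching)


def parallel_avg(blades, threshold):
    # Scatter: run the same partial query against every blade concurrently.
    with ThreadPoolExecutor(max_workers=len(blades)) as pool:
        partials = list(pool.map(lambda b: partial_sum_count(b, threshold), blades))
    # Gather: combine partial sums and counts (not partial averages) into the final answer.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


print(parallel_avg(blades, 4))
```

Note the design choice in the gather step: averaging each blade's average would give the wrong result when blades hold different numbers of matching rows, so each blade returns a sum and a count that can be combined exactly. In CPython, threads are limited by the global interpreter lock, so this sketch shows the shape of the work rather than real parallel speedup; actual blades run on separate hardware.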

When you think about the types of longer-running queries that IDAA can boost, think of queries like the following:

`SELECT something FROM big table WHERE suitable filter clause`

`SELECT something with aggregation FROM big table WHERE suitable filter clause`
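To make these two query shapes concrete, the Python sketch below runs one query of each shape against a small in-memory SQLite database. SQLite is only a convenient stand-in for illustrating the SQL; it is not Db2 or IDAA, and the `sales` table and its columns are hypothetical.

```python
import sqlite3

# In-memory SQLite database used only to illustrate the query shapes; it is not Db2 or IDAA.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, year INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO sales (region, year, amount) VALUES (?, ?, ?)",
    [("EAST", 2023, 100.0), ("EAST", 2024, 250.0), ("WEST", 2024, 80.0), ("WEST", 2024, 20.0)],
)

# Shape 1: SELECT something FROM big table WHERE suitable filter clause
detail = conn.execute(
    "SELECT sale_id, region, amount FROM sales WHERE year = ? ORDER BY sale_id", (2024,)
).fetchall()

# Shape 2: SELECT something with aggregation FROM big table WHERE suitable filter clause
summary = conn.execute(
    "SELECT region, SUM(amount) FROM sales WHERE year = ? GROUP BY region ORDER BY region",
    (2024,),
).fetchall()

print(detail)   # [(2, 'EAST', 250.0), (3, 'WEST', 80.0), (4, 'WEST', 20.0)]
print(summary)  # [('EAST', 250.0), ('WEST', 100.0)]
conn.close()
```

With four rows these queries are instantaneous; the shapes matter when the table holds hundreds of millions of rows and the filter still leaves a large slice to scan and aggregate, which is where offloading to an accelerator pays off.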

IBM Z Table Accelerator

But IDAA is not designed to help your short-running transactional queries, at least not much. So we can turn to another IBM offering to help out here: IBM Z Table Accelerator. At a high level, IBM Z Table Accelerator is an in-memory table accelerator for Db2 and/or VSAM tables that can dramatically improve overall Z application performance and reduce operational cost.

The most efficient way to access data is, of course, in memory. Disk access is orders of magnitude less efficient than accessing data in memory: memory access is usually measured in microseconds, whereas disk access is measured in milliseconds. (Note that 1 millisecond equals 1,000 microseconds.)
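A quick back-of-the-envelope calculation shows what that difference means for a batch job. The Python sketch below assumes round, illustrative latencies of 5 microseconds for an in-memory lookup and 5 milliseconds for a disk I/O; these are not measurements, and in practice Db2 satisfies many requests from buffer pools without a physical I/O.

```python
# Assumed, illustrative latencies (not measurements); real values vary by hardware and workload.
MEMORY_ACCESS_US = 5    # an in-memory lookup, in microseconds
DISK_IO_US = 5 * 1000   # a 5 ms disk I/O, expressed in microseconds (1 ms = 1,000 us)


def elapsed_seconds(accesses: int, latency_us: float) -> float:
    """Total wait time, in seconds, for a number of serial accesses at a given latency."""
    return accesses * latency_us / 1_000_000


lookups = 1_000_000  # e.g. a batch job doing one reference-data lookup per input record
print(elapsed_seconds(lookups, DISK_IO_US))        # 5000.0
print(elapsed_seconds(lookups, MEMORY_ACCESS_US))  # 5.0
```

Under these assumptions, the same million lookups take roughly 83 minutes of I/O wait from disk versus 5 seconds from memory, a factor of 1,000 that follows directly from the millisecond-versus-microsecond difference.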

This is the case not only because disk access is mechanical and memory access is not, but also because many actions take place behind the scenes whenever you request an I/O. Take a look at this diagram, which comes from a Marist University white paper on mainframe I/O.

The idea here is to show the complexity of the operations required to request data and move it from disk to memory, not to walk through each of these activities explicitly. If you are interested in doing that, I refer you to the link shown for the white paper.

So an in-memory table processor, like IBM Z Table Accelerator, can keep data in memory for program access, eliminating the processing and complexity of disk-based I/O operations. The benefits of IBM Z Table Accelerator are many: it can reduce resource consumption, shorten elapsed times in batch windows, lower operational cost, and improve system capacity.
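The basic idea of keeping a table in memory for program access can be sketched in Python by counting trips to a backing store. This is a generic load-once, read-many illustration; `DiskBackedTable` and `InMemoryTable` are hypothetical classes and do not represent the IBM Z Table Accelerator API.

```python
class DiskBackedTable:
    """Stand-in for a disk-resident table: each access is counted as one I/O."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.io_count = 0

    def read(self, key):
        self.io_count += 1
        return self._rows.get(key)

    def scan(self):
        # Simplification: a full scan is counted as a single I/O.
        self.io_count += 1
        return list(self._rows.items())


class InMemoryTable:
    """Hypothetical in-memory copy: loaded from the source once, then served from memory."""

    def __init__(self, source):
        self._cache = dict(source.scan())

    def read(self, key):
        return self._cache.get(key)


# Illustrative reference data: country codes and names.
countries = DiskBackedTable({"US": "United States", "DE": "Germany", "JP": "Japan"})
lookups = ["US", "DE", "JP"] * 1000  # e.g. one lookup per input record in a batch job

for code in lookups:
    countries.read(code)
direct_ios = countries.io_count  # every lookup went to the backing store

countries.io_count = 0
cached = InMemoryTable(countries)
for code in lookups:
    cached.read(code)
cached_ios = countries.io_count  # only the initial load touched the backing store

print(direct_ios, cached_ios)  # 3000 1
```

The pattern works best for exactly the data discussed above: reference data that is read constantly but changes rarely, so the one-time load cost is amortized over many lookups.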

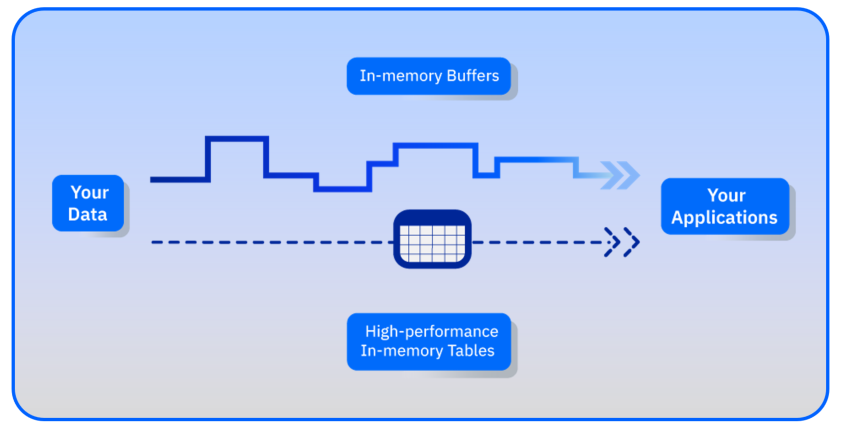

The concept is simple enough, as shown in this overview graphic (above). The IBM Z Table Accelerator is used to host reference and/or temporary data in memory, instead of on disk, to significantly improve application performance.

So let’s take a look at the difference between an I/O operation (or any fetch of data from Db2) and accessing the data using IBM Z Table Accelerator:

The top of the diagram shows the code path required by the data request (or fetch) as it makes its way from disk through Db2 and back to the application. The bottom portion of the diagram shows the code path for accessing the data using IBM Z Table Accelerator. That path is a significant simplification of the process, which should help to clarify how much more efficient in-memory table access can be.

The Bottom Line

If you take the time to analyze the type of data you are using, and how you are using it, you can use complementary acceleration software from IBM to optimize your applications’ data access. For analytical, long-running queries, consider using IBM Db2 Analytics Accelerator. For transactional processing of reference data and temporary data, consider using IBM Z Table Accelerator.

Both technologies are useful for different types of data and processing.
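The rule of thumb from this article can be condensed into a small Python decision function. It is a rough sketch of the guidance, not an IBM sizing or placement tool; the enum names are hypothetical, and the fallback for transactional data that is neither reference nor temporary data (stay with plain Db2 for z/OS) is an assumption, since the article makes no accelerator recommendation for that case.

```python
from enum import Enum


class Usage(Enum):
    ANALYTICAL = "analytical"
    TRANSACTIONAL = "transactional"


class Kind(Enum):
    REFERENCE = "reference"
    TEMPORARY = "temporary"
    OPERATIONAL = "operational"  # hypothetical label for other business data


def suggest_accelerator(usage: Usage, kind: Kind) -> str:
    """Rough encoding of the article's rule of thumb; not an IBM sizing tool."""
    if usage is Usage.ANALYTICAL:
        # Long-running analytical queries: offload to the analytics accelerator.
        return "IBM Db2 Analytics Accelerator"
    if kind in (Kind.REFERENCE, Kind.TEMPORARY):
        # Short transactional access to reference or temporary data: keep it in memory.
        return "IBM Z Table Accelerator"
    # Assumption: no accelerator suggested for other transactional data.
    return "Db2 for z/OS (no accelerator suggested)"


print(suggest_accelerator(Usage.TRANSACTIONAL, Kind.REFERENCE))  # IBM Z Table Accelerator
```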

Originally published at The Db2 Portal.
