Law Brakers

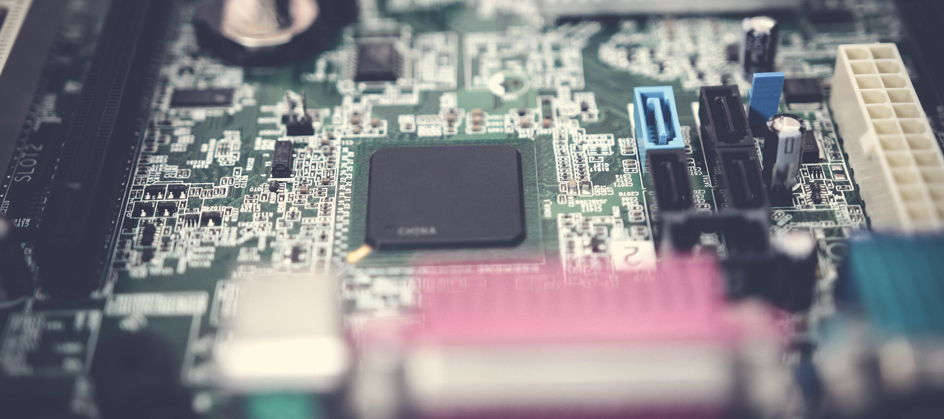

According to Wikipedia (Moore’s law), Intel co-founder Gordon Moore introduced his eponymous law in a 1965 paper that described a doubling every year in the number of components per integrated circuit. By 1975, he had already adjusted that downwards to every two years, though many people split the difference at 18 months.
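To see how much rides on the choice of doubling period, here is a minimal sketch of the arithmetic. The function name `growth_factor` and the ten-year horizon are my own illustrative assumptions, not anything from Moore's paper.

```python
def growth_factor(years: float, doubling_period_months: float) -> float:
    """How many times a quantity multiplies after `years`
    if it doubles once every `doubling_period_months` months."""
    doublings = years * 12 / doubling_period_months
    return 2 ** doublings


# Compare the three doubling periods mentioned above over one decade.
for months in (12, 18, 24):
    factor = growth_factor(10, months)
    print(f"doubling every {months} months: about {factor:,.0f}x in 10 years")
```

Over a decade, that is roughly a thousandfold increase at 12 months, about a hundredfold at 18 months, and 32-fold at 24 months, so "splitting the difference" is no small rounding error.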

Since then, we have implicitly picked up this law and applied it to CPU speed, computer prices, and density of memory, storage and pixels.

And these all have one thing in common: the laws of physics suggest there are concrete limits to how far this “law” can go before it reaches the point of diminishing returns, Isaac Asimov’s famous story “The Last Question” notwithstanding (in it, he suggests computing will eventually shed its limitations by transitioning to hyperspace).

FWIW, in 1995 (again, according to Wikipedia – see Wirth’s law), Pascal programming language originator Niklaus Wirth invented his own eponymous counter-law suggesting that software bloat was basically sucking up all of the capacity that Moore’s Law had given us, and then some.

All of which is to say: we can’t keep using up resources and assuming we’ll never run out. (Hey, didn’t someone who claimed to have invented the Internet say something like that? I wonder if he was employing a similar algorithm to reach that conclusion.) So, it seems, we’ll need to find strategies for doing more with less.

Paging a Turn

Of course, this all seems to be a little bit of history repeating. Throughout human history we’ve gone through countless cycles of boom and bust, prodigality and frugality, as we realize a resource, find ways to maximize its exploitation, and then grasp at the last few twigs as it winds down and perhaps peters out.

I suppose the good news here is that no one’s saying computers are going away any time in the foreseeable future. But let’s be honest: there’s a reason we’d rather suspend than reboot our laptops, and we resist powering off and on our mobile phones. The faster they ostensibly get, the slower they actually seem to get, as we load them down with apps and every different enhancement and configuration to conform with security, regulations and consumer demand for the latest and greatest.

I remember when rebooting my Apple ][+ seemed to take an eternity. With its 140K diskette, 64K of RAM and 1 MHz 6502 processor, it seems woefully underpowered compared to modern consumer electronics, including home computers. But I never had time to go get a snack or beverage while waiting for it to start up – though maybe when waiting for a Pascal program to compile.

Of course, if you work on the mainframe, you may well point out that doing an IPL (“Initial Program Load” – a mainframe reboot) has often been a manually-intensive effort that has taken over an hour from initiating shutdown to being back up and functioning.

To which I’d reply, “Unless you use automation. I’ve done an automated IPL in less time than Windows takes to be sure it’s all the way back up and responsive.”

And there’s the rub: mainframe automation isn’t just another layer of bloatware abstracting the user from the underlying technical layers. It’s real, deliberately-designed interaction with the complete mainframe environment, optimized for every technological and business aspect of the entire platform. This is no mile-high mound of pies. It can’t be: the mainframe has never reached a point where it could take it easy because it had more capacity than anyone could use. Businesses pay through the nose for every mainframe byte and CPU cycle, and because every cost of the mainframe, from hardware to applications to attached devices to support personnel, is visible to management as a single, giant number, it never gets any slack.

Taut: a Lesson

Ah, scrutiny. You know: the opposite of what petty cash buys you. Of course, petty cash also has been responsible for much of modern business computing. As long as no single component – software, hardware, etc. – was over the petty cash limit, non-IT organizational units could acquire hardware and software all they wanted. And when it amounted to a parallel IT environment that required more staff to maintain than everyone dedicated to the mainframe combined, it suddenly shifted over to IT and implicitly took over that department like an invasion of tribbles.

Of course, there’s one thing a rising tide of this nature doesn’t easily lift: massive boat anchors. You know, like the mainframe was called. Bringing everyone down with insistence on scruples that no other platform could respect. The Big Iron obstacle to the ebb and flow of sizzle and trends.

And yet, those organizations that had mainframes continue to rely on them for their key corporate data and processing, even as that data is used by applications across every other platform. Why? Because it worked: reliable, secure, available, and performing in a way that was conceptually on a different plane of existence.

And the reason it worked? Legacy.

OK, OK: I got that backwards. Actually, “Legacy” implies “it works.” But either way, the fact is that, since before IBM announced the first modern mainframe, the System/360, on April 7, 1964, there has been no slack in the development and usage of this most cost-benefits-intensive of platforms.

The history of the development of computing before the introduction of hobby computing was always governed by the cost-benefits requirement that every resource justify its existence. That’s pretty remarkable considering the $5 billion (in 1964 dollars) that IBM invested in the development of the S/360.

So every organization that participated in the development of mainframe computing also watched their pennies with care, working individually and together (including at SHARE and on the CBT Tape) to find ways to maximize the value of their mainframe investments, from swapping pieces of programs between memory and disk to save on RAM costs, to monitoring CPU performance and usage to absolutely minimize waste.

Which became the culture of the mainframe, as each new cohort of mainframers learned to take care with the available resources and ensure they were maximizing their value from those resources.

So, in the 1970s, a mere decade following the origination of the S/360, when the US Government opened the platform up to competition, the first software vendors introduced a range of solutions for maximizing the cost-benefits performance of the mainframe, from sorting and automation to performance management. A penny saved…

Pieces of Eight

If two bits is a quarter, does that mean a dollar is a byte? If so, the most valuable thing on Earth would be memory.

That seems relevant to George Santayana’s assertion that, “Those who cannot remember the past are condemned to repeat it.”

Ah, legacy. All the lessons learned on the mainframe… which continues to be the most cost-benefits effective business computing platform on Earth. Except people have a funny way of getting in denial about things they don’t like – even when they don’t know why they don’t like them.

Seriously: why does anyone not like the mainframe? Are they afraid of quality? Functionality? Security? Responsibility….!?

Right: no one wants a nerd telling them how to live their lives. Let’s be honest: we nerds are just too darn pedantic. As my friend Rafi Gefen reminds me: “In theory, practice and theory are the same thing. In practice, they’re not.”

So, somehow, the mainframe “green screen” got tarred with that distaste we have for the pedantic logicians in our lives, but PCs, with all their blue screens and viruses, got associated with “normal folk.” Yeah, well give me a green screen over a blue screen any day.

Unfortunately, that’s how society works. We choose comfort over exactitude. That’s why cloud is such a popular concept: it’s literally nebulous.

But at the bottom of it all, we still hope and expect that our bank accounts, insurance, health care records, government tax information, and manufacturing of all critical things like airplanes will be done so scrupulously as to cause us no concern, even if that means we forget about them.

And they are, because they’re done on a mainframe, where every byte, every CPU cycle, and every regulation and functional aspect are treated as uncompromisingly important.

Tell: Overture

That should be music to your ears. It means something out there works, securely, reliably, efficiently. And that’s all the proof you need that it’s possible.

Which brings us back to… d’oh! Yes, it’s possible to rise above the limitations of Moore’s Law being brought to the knee of its curve. And the lessons are not just “out there”: they’re smack in the middle of the world economy waiting to be re-learned by computer professionals who genuinely choose to deserve being considered professionals.

Yes, it’s possible to pay attention to the physical architecture, operating system, applications, bits and bytes and bandwidth at a level of detail that is measurable and achievable. Of course, you have to actually have the will to measure that performance. And to insist on that level of quality.

But the fact is, it’s doable, because it’s being done. Never settle for less if you truly want more.
